tl;dr: Docker's default bind mount performance for projects requiring lots of I/O on macOS is abysmal. It's acceptable (but still very slow) if you use the cached or delegated option. But it's actually fairly performant using the barely-documented NFS option!

July 2020 Update: Docker for Mac may soon offer built-in Mutagen sync via the :delegated sync option, and I did some benchmarking here. Hopefully that feature makes it to the standard Docker for Mac version soon.

September 2020 Update: Alas, Docker for Mac will not be getting built-in Mutagen support at this time. So, read on.

Ever since Docker for Mac was released, shared volume performance has been a major pain point. It was painfully slow, and around 2017 the community finally got a cached mode that offered a 20-30x speedup for common disk access patterns. Since then, the File system performance improvements issue has been a common place to gripe about the lack of improvements to the underlying osxfs filesystem.

NFS volumes have been supported in Docker (albeit barely documented) since around 2016 (see Docker local volume driver-specific options).

As part of my site migration project, I've been testing different local development environments, and as a subset of that testing, I decided to test different volume/sync options for Docker to see what's the fastest—and easiest—to configure and maintain.

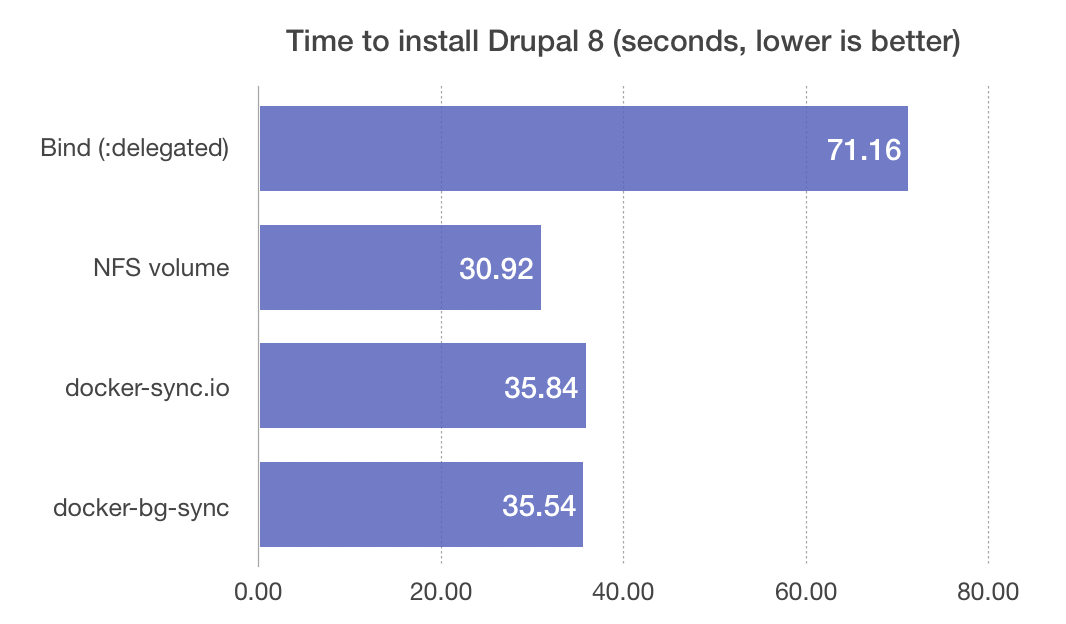

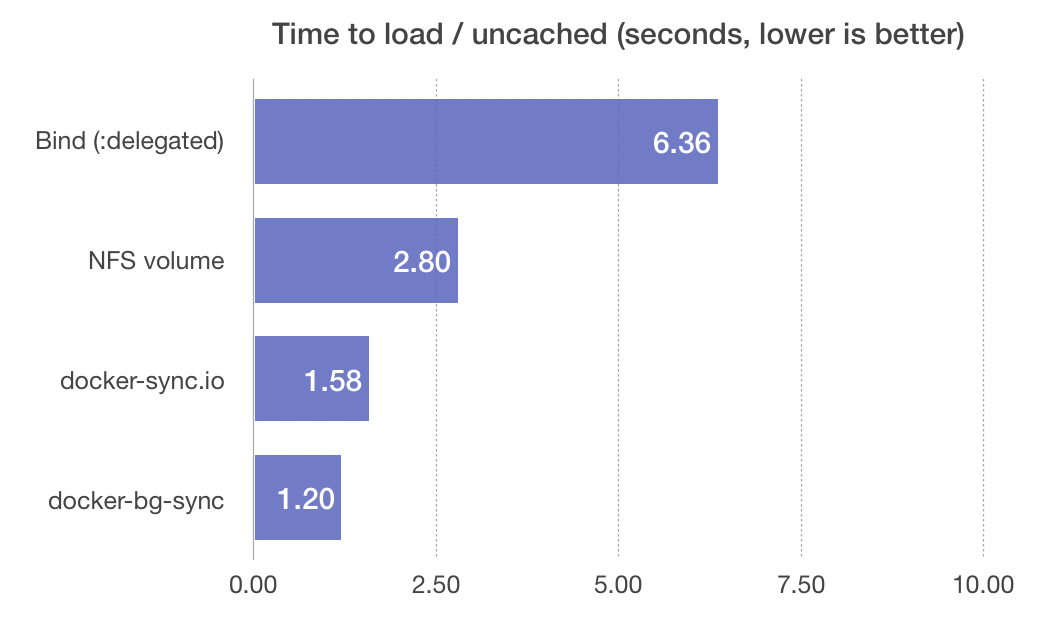

Before I drone on any further, here are some benchmarks:

Benchmarks explained

The first benchmark installs Drupal, using the JeffGeerling.com codebase. The operation requires loading thousands of code files from the shared volume, writes a number of files back to the filesystem (code, generated templates, and some media assets), and does a decent amount of database work. The database is stored on a separate Docker volume, and not shared, so it is plenty fast on its own (and doesn't affect the results).

The second benchmark loads the home page (/) immediately after the installation; this page load is entirely uncached, so Drupal again reads all the thousands of files from the filesystem and loads them into PHP's opcache, then finishes its operations.

Both benchmarks were run four times, and nothing else was open on my 2016 MacBook Pro while running the benchmarks.

Using the different sync methods

NFS

To use NFS, I had to do the following (note: this was on macOS Catalina—other systems and macOS major versions may require modifications):

I edited my Mac's NFS exports file (which was initially empty):

sudo nano /etc/exports

I added the following line (to allow sharing any directories in the Home directory—under older macOS versions, this would be /Users instead):

/System/Volumes/Data -alldirs -mapall=501:20 localhost

(When I saved the file macOS popped a permissions prompt which I had to accept to allow Terminal access to write to this file.)
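The 501:20 in that line is the UID of the first user account created on a Mac and the GID of the staff group, so the UID may differ on other machines. A minimal sketch for generating the line from the current account, assuming a hypothetical helper named exports_line and that you still paste the result into /etc/exports by hand:

```shell
#!/bin/sh
# exports_line is a hypothetical helper, not part of macOS or Docker.
# Usage: exports_line <shared-root> <uid> <gid>
exports_line() {
  printf '%s -alldirs -mapall=%s:%s localhost\n' "$1" "$2" "$3"
}

# Print the line for whoever runs the script; nothing is written to /etc/exports.
exports_line /System/Volumes/Data "$(id -u)" "$(id -g)"
```

On older macOS versions the first argument would be /Users instead, as noted above.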

I also edited my NFS config file:

sudo nano /etc/nfs.conf

I added the following line (to tell the NFS daemon to allow connections from any port; this is required, otherwise Docker's NFS connections may be blocked):

nfs.server.mount.require_resv_port = 0

Then I restarted nfsd so the changes would take effect:

sudo nfsd restart
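Since these edits are easy to apply twice by accident, here is a rough sketch of making them idempotent. ensure_line is a hypothetical helper, and the demo writes to a scratch directory rather than /etc; on a real Mac you would run it as root against /etc/exports and /etc/nfs.conf, then restart nfsd as shown above.

```shell
#!/bin/sh
# ensure_line is a hypothetical helper: append a line to a file only if
# an identical line is not already there.
# Usage: ensure_line <file> <line>
ensure_line() {
  grep -qxF -e "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Demo against a scratch directory (an assumption for safety; the real files live in /etc).
conf_dir=$(mktemp -d)
ensure_line "$conf_dir/exports" '/System/Volumes/Data -alldirs -mapall=501:20 localhost'
ensure_line "$conf_dir/nfs.conf" 'nfs.server.mount.require_resv_port = 0'
# Running it a second time changes nothing.
ensure_line "$conf_dir/nfs.conf" 'nfs.server.mount.require_resv_port = 0'
cat "$conf_dir/exports" "$conf_dir/nfs.conf"
```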

Then, to make sure my Docker Compose service could use an NFS-mounted volume, I added the following to my docker-compose.yml:

    ---
    version: '3'

    services:
      drupal:
        [...]
        volumes:
          - 'nfsmount:/var/www/html'

    volumes:
      nfsmount:
        driver: local
        driver_opts:
          type: nfs
          o: addr=host.docker.internal,rw,nolock,hard,nointr,nfsvers=3
          device: ":${PWD}"

Note that I have my project in ~/Sites, which is covered under the /System/Volumes/Data umbrella... for older macOS versions you would use /Users instead, and for locations outside of your home directory, you have to grant 'Full Disk Access' in the privacy system preference pane to nfsd.

Some of this info I picked up from this gist and its comments, especially the comment from egobude about the changes required for Catalina.

So, for NFS, there are a few annoying steps, like having to manually add an entry to your /etc/exports, modify the NFS configuration, and restart NFS. But at least on macOS, everything is built-in, and you don't have to install anything extra, or run any extra containers to be able to get the performance benefit.

docker-sync.io

docker-sync is a Ruby gem (installed via gem install docker-sync) that requires an additional configuration file (docker-sync.yml) alongside your docker-compose.yml file, and you then have to start docker-sync before starting your docker-compose setup. It also ships with extra wrapper functions that can do it all for you, but overall, it felt a bit annoying to have to manage a second tool on top of Docker itself just to get syncing working.

It also took almost two minutes (with CPU at full bore) the first time I started the environment for an initial sync of all my local files into the volume docker-sync created that was mounted into my Drupal container.

It was faster for most operations (sometimes by 2x) than NFS (which was 2-3x faster than :cached/:delegated), but for some reason the initial Drupal install was actually a few seconds slower than NFS. Not sure the reason, but might have to do with the way unison sync works.

docker bg-sync

bg-sync is a container that syncs files between two directories. For my Drupal site, since there are almost 40,000 files (I know... that's Drupal for you), I had to give this container privileged access (which I'm leery of doing in general, even though I trust bg-sync's maintainer).

It works with a volume shared from your Mac to it, then it syncs the data from there into your destination container using a separate (faster) local volume. The configuration is a little clunky (IMO), and requires some differences between Compose v2 and v3 formats, but it felt a little cleaner to manage than docker-sync, because I didn't have to install a rubygem and start a separate process—instead, all the configuration is managed inside my docker-compose.yml file.

bg-sync offered around the same performance as docker-sync (they both use the same unison-based sync method, so that's not a surprise), though for some reason, the initial sync took closer to three minutes, which was a bit annoying.

Summary

I wanted to write this post after spending a few hours testing all these different volume mount and sync tools, because so many of the guides I've found online are either written for older macOS versions or are otherwise unreliable.

In the end, I've decided to stick to using an NFS volume for my personal project, because it offers nearly native performance (certainly a major improvement over the Docker for Mac osxfs filesystem), is not difficult to configure, and doesn't require any extra utilities or major configuration changes in my project.

What about Linux?

I'm glad you asked! I use the exact same Docker Compose config for Linux—all the NFS configuration is stored in a docker-compose.override.yml file I use for my Mac. For Linux, since normal bind mounts offer native performance already (Docker for Linux doesn't use a slow translation layer like osxfs on macOS), I have a separate docker-compose.override.yml file which configures a standard shared volume.
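One way to keep both variants in the same repository is to commit them under separate names and pick one per machine. A small sketch, assuming hypothetical file names docker-compose.nfs.yml and docker-compose.bind.yml (the post itself only says each machine has its own docker-compose.override.yml):

```shell
#!/bin/sh
# override_for is a hypothetical helper; the two file names are assumptions.
# It maps a kernel name, as printed by `uname -s`, to the override variant to use.
override_for() {
  case "$1" in
    Darwin) echo docker-compose.nfs.yml ;;   # macOS: NFS volume
    *)      echo docker-compose.bind.yml ;;  # Linux and others: plain bind mount
  esac
}

# Show which variant this machine would use; copying it into place is left to you:
#   cp "$(override_for "$(uname -s)")" docker-compose.override.yml
override_for "$(uname -s)"
```

Docker Compose reads docker-compose.override.yml automatically when it sits next to docker-compose.yml, so no extra command-line flags should be needed after the copy.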

And in production, I bake my complete Docker image (with the codebase inside the image)—I don't use a shared volume at all.

Comments

Can you share native Linux performance timings for full transparency? If nothing else, such details could help new developers better gauge which development platform to choose to maximize their productivity.

Good idea! I'll try to get that done in a revision to this post. Just didn't think of it in the initial benchmarking.

Note that even if a task takes twice as long, if it's not the major barrier to finishing a project (even if repeated a thousand times), then the tool or platform's raw performance might not be the most important measure. Just like picking file copy benchmarks over real world Drupal benchmarks would be insufficient for making a good decision for file sharing.

There are a lot of other things besides Docker file system performance that I consider when choosing a development platform, and my decision may hinge on different factors than the next person's, too!

"Note that even if a task takes twice as long, if it's not the major barrier to finishing a project (even if repeated a thousand times), then the tool or platform's raw performance might not be the most important measure."

I agree with this. Too often is it overlooked that a developer's efficiency can be partially (or largely) impacted by the Operating System they work in. Forcing a user that works better in Mac OS to use Linux or Windows would impact their performance. It could also degrade their work performance due to frustration, dislike, or unfamiliarity with the OS "forced" on them. Unless the performance difference impairs work progress, it should not be a reason to NOT use Mac OS for development.

In contrast, it should also NOT be a reason to force developers into using Mac OS over Windows or Linux... especially since those OSes are built with tooling specifically suited for Docker/cloud development. That's something Mac OS CAN do, but largely only with unofficial ports of software installed.

Very true; on a well-functioning team anyone should use their preferred tools to get the job done. If you go to a construction job site, it's sometimes nice to have everyone using the DeWalt or Milwaukee system of battery tools so they can share chargers and batteries—but as long as a person's tools aren't holding back the team (e.g. "I have to unplug the charger everyone else is using so I can charge my batteries"), it's not an issue. Especially if that particular worker is way more efficient with the more specialized tools.

The gist there restarts Docker. Did you?

The open -a Docker command just opens Docker (if it's not already open)... from my quick test, that doesn't actually trigger a restart if it's already running. I'm guessing that was more just a convenience to make sure Docker is running at the end of the script? Note that for one-off things like this, I don't like running a script to modify a couple system settings (which is why I did it by hand).

Hi, this should also work with Catalina right?

Sorry I didn't read that you used Catalina.

I followed the steps, but when I try to connect to my website, the browser shows the error ERR_CONNECTION_REFUSED

If you're using NFS that shouldn't be causing any issues with browser connections. If you're trying to run Drupal or some other web application, then maybe it's not responding correctly, or there's a different connection issue (e.g. you're trying to connect via https while the docker setup is only accepting http connections, or there's an invalid certificate...).

I solved the problem by granting nfsd the 'Full Disk Access' even though my docker files are under /System/Volumes/Data.

Now it works and it's blazing fast :D

Thanks a lot for sharing this :)

A couple of years back I spent so much time dealing with all of this :(

I finally gave up on the idea of having Docker run natively on macOS. I repurposed a Mac Mini as a headless Linux box (running Ubuntu Server) and installed Docker there. I still use NFS to share codebases as necessary from my main workstation, and use native docker and docker-compose clients to interact with the remote docker server, which gives me the best of both worlds (not without a few quirks).

I wanted to note that after a macOS upgrade today, I got the following error bringing up the Docker environment:

Creating jeffgeerling-com ... error

ERROR: for jeffgeerling-com Cannot create container for service drupal: failed to mount local volume: mount :/Users/jge

Creating drupal-mysql ... done

rd,nointr,nfsvers=3: permission denied

ERROR: for drupal Cannot create container for service drupal: failed to mount local volume: mount :/Users/jgeerling/Sites/jeffgeerling-com:/var/lib/docker/volumes/jeffgeerling-com_nfsmount/_data, data: addr=192.168.65.2,nolock,hard,nointr,nfsvers=3: permission denied

ERROR: Encountered errors while bringing up the project.

The fix was to simply restart nfsd again:

sudo nfsd restart

Hey Jeff -- thanks for this writeup! I'm wondering -- do you happen to know if using FileVault encryption (or not) has any meaningful effect (assuming SSD and fairly recent processor)? The Google suggests a couple of folks have seen issues, but I couldn't find much info one way or the other.

I haven't had an issue; I've always had FileVault full disk encryption on, and on any recent Mac with an SSD it's not really a noticeable change, at least in my non-scientific opinion.

You should note that docker-sync is very unstable: it stops syncing files for "no reason" and you don't even know it. If you are using big Drupal projects on a day-to-day basis, it is hell to work with.

Hi Jeff. After following the steps, I still get

php_1 | sudo: setrlimit(RLIMIT_CORE): Operation not permitted

php_1 | chown: /var/www/html: Operation not permitted

I know you don't have time to understand the intricacies my local setup might contain, but could you let me know if you have any debugging techniques that might help determine what is happening?

Thanks

Thank you for this! I tried it and I'm experiencing some weird issues. I'm using a Docker setup on Mojave with Apache and PHP-FPM. All aggregation and opcache are disabled. All other files are updating correctly and fast, but my CSS and JS changes aren't working or updating. Any ideas on this?

Thank you very much.

I've been struggling with Docker performance, but in the middle of several projects that didn't leave me much time to think about it, I couldn't find a comprehensive explanation (or, rather, how-to) on how to effectively use NFS.

Thanks to you I've been able to understand (more or less) and quickly change my way of sharing folders, and some big applications that performed very poorly now behave decently, which is a huge relief.

Thank you very much

You're welcome, that's why I like to blog about these things!

Update: Turns out this is still problematically slow in a Laravel project, on a page with ~150 views called.

A page that takes about 500ms on a Linux Docker setup (with very little resources available) takes about 45 seconds on OSX, with NFS.

At this point I don't know if OSX and Docker is a solution at all for PHP/Laravel projects, because there's only so much code cleanliness I'm willing to sacrifice for performance.

Hey, thanks for taking the time to test that!

Upon reading your article I've actually tried it and I found out this approach has one serious problem - permissions. In my setup I need a predictable UID and it's not possible to squash the permissions on the mount side. My superficial investigation indicates that it's probably because docker is actually mounting the volume in overlayfs. Docker for Mac uses ext4 for its persistent file system which should support the perms squash, if only it was easy to change the mountpoint. Seems it would require plug-ins which are not that straightforward to set up on a Mac. I need simplicity because the setup will be used by tens of people daily. My experience dictates that anything that could go wrong will go wrong countless times requiring lots of support time. I'm not giving up yet though.

Really interesting write-up, and some solid testing!

I've worked on a Drupal 8 site using Docker on macOS for about two years now. Our team has always struggled with the volume mounts setup & performance, so was excited to test out your NFS solution.

Unfortunately I haven't seen the same improvements in my own benchmarks. The tests I used are a bit different, but represent some of the slowest operations that we do on a daily basis. Particularly nuking & reinstalling all of our dependencies, although extreme, is something we end up doing a lot of, as we switch between branches with various module & core versions. NFS is a bit faster for some operations but a bit slower for others.

Here are my test results. (all tests run a few times, times averaged)

- composer install with a completely empty vendor directory, which will install Drupal core & all of our contrib modules: delegated: 5 min 01 sec
- yarn install with a completely empty node_modules directory: delegated: 1 min 52 sec
- drush cr: delegated: 16.564 sec
- Uncached home page load: delegated: 770 msec

I compared the NFS mount to a default delegated mount so that I was testing in a similar way to how you were, and because a delegated mount is a pretty standard way to get a project set up.

But that's actually not what we've been working with for the past few months.

Our current setup involves creating container-specific volumes for the subdirectories that contain thousands of files, like so:

The obvious downside is that we're explicitly NOT syncing these folders between the container and the host. It turns out that that doesn't really matter. All of that code runs on the container, and we're not likely to be modifying the internals of Drupal core or our composer dependencies on a daily basis.

And this approach has a few other downsides:

- [...] composer install and yarn install on the host as well as the container. Otherwise the host's folders will be empty or out of date.
- [...] web/core if we're upgrading/downgrading core, because it's a volume mount. The workaround is to rm -rf web/core/* -- then it won't try and delete the folder.
- [...] vendor folder back to some earlier revision, for example. I suspect this is because we're mounting folders over the top of each other - first everything in the current directory, which includes e.g. vendor, and then trying to overwrite vendor with a different Docker volume. I haven't seen documentation for this approach, so I suspect that it works by chance and its behaviour is not strictly defined. When this happens, we can nuke & reinstall our dependencies to get things working again, but performance slows back down to the level as if none of our special subdirectories had their own volumes.

So far we've stuck with this solution despite its flaws, because the performance is great! It's the closest we've been able to get to native performance: composer install in 52.785 sec, yarn install in 43.011 sec, drush cr in 6.525 sec, and uncached home page loads in 611 msec.

But it still just feels... a bit dodgy. If only it all just worked, right?! 🤷♂️
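For readers who want to try the container-specific volume idea from this comment, here is a rough sketch using plain docker run flags rather than Compose. mount_flags is a hypothetical helper, and the use of anonymous volumes is an assumption (the comment does not say whether its volumes are named or anonymous).

```shell
#!/bin/sh
# mount_flags is a hypothetical helper. It prints `docker run` volume flags:
# one bind mount for the whole project, then one anonymous volume per
# subdirectory that should live only inside the container.
# Usage: mount_flags <host-dir> <container-dir> [subdir...]
mount_flags() {
  host=$1
  target=$2
  shift 2
  printf '%s %s:%s' -v "$host" "$target"
  for sub in "$@"; do
    printf ' -v %s/%s' "$target" "$sub"
  done
  printf '\n'
}

# Directory names follow the comment above; the container path is the one used in the post.
mount_flags "$PWD" /var/www/html vendor node_modules web/core
```

The printed flags are meant to be passed to docker run ahead of the image name. Paths containing spaces would need more careful quoting than this sketch provides.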

Hey there, well I am not using Drupal, but I'm working on an enterprise-grade Symfony project, so I feel the same pain. My team of 35 all use Macs, and "normal" page load times on Linux are ~10s... So, Docker for Mac (40+ sec) was never really an option.

I have, over time, tested all of these methods, and more, and haven't ever made the decision to pursue them further. Yes, speeds might be quite a bit faster with docker-sync for example, but CPU load goes through the roof as well. NFS is even faster, but it requires having a stateful OSX setup, which is just a plain bad idea.

In the end, we settled for a boot2docker image running on a VirtualBox VM locally, and rewired to local docker instance via docker-machine env variables. From the perspective of the developer, everything is exactly the same as before (except the speed which is faster than all methods above), and since we didn't have to change anything in our docker setup (as it is in fact running on a linux vm) we kept the dev prod parity.

There are two hiccups left now.

It is still slow and hard on CPU. It's waaay better than D4M, but compared to a simple C2D Ubuntu PC, my i7 MBP is dying and roaring half the time, and page loads as well as build times are still quite a bit slower than Linux native.

Every time you want to use Docker for our product, you have to run the following script in the terminal window, as it attaches docker-machine environment variables to that terminal window. It's not really a problem, just an annoyance.

P.S. Also, I've read above all kinds of crap about vendor folders. Listen. Don't even try to sync them. Trust me. Just keep separate copies and get quite a lot of performance gain for it. It's worth the tradeoff, and it is very simple to implement into the deployment shell script process. Since there is no real way to move composer without editing Symfony, and there is no way at all to move node_modules, we use a simple trick of headless mount volumes as seen below:

Thanks for all the effort into this post! appreciate it :)

Thanks very much for this, it still works fine with macOS Big Sur and for my particular task at the moment (lots of write operations of small files) it speeds things up by about 30-40% compared to a regular mounted volume.

The only thing I needed to modify was the user ID for the NFS exports file. Mine happens to be 502, rather than 501 (because the account I'm using was the second one to be created on the machine).