Since the day I received a pre-production Raspberry Pi Compute Module 4 and IO Board, I've been testing a variety of PCI Express cards with the Pi, and documenting everything I've learned.

The first card I tested after completing my initial review was the IO Crest 4-port SATA card pictured with my homegrown Pi NAS setup below:

But it's been a long time testing, as I wanted to get a feel for how the Raspberry Pi handled a variety of storage situations, including single hard drives, single SSDs, and RAID arrays built with mdadm.

I also wanted to measure thermal performance and energy efficiency, since the end goal is to build a compact Raspberry-Pi based NAS that is competitive with any other budget NAS on the market.

Besides this GitHub issue, I documented everything I learned in the video embedded below:

The rest of this blog post will go through some of the details for setup, but I don't have the space in this post to compile all my learnings here—check out the linked issue and video for that!

Getting the SATA card working with the Pi

Raspberry Pi OS (and indeed, any OS optimized for the Pi currently, like Ubuntu Server for Pi) doesn't include all the standard drivers and kernel modules you might be used to having available on a typical Linux distribution.

The SATA kernel modules are not included by default, so the first step in using a PCIe card like the IO Crest (which uses a Marvell 9215 chip that is supported in the kernel) is to compile (or cross-compile, in my case) the kernel with CONFIG_ATA and CONFIG_SATA_AHCI enabled.

I have full directions for recompiling the kernel with SATA support on the Pi itself, too!
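If you want to confirm that a kernel build actually has those two options turned on, here is a minimal sketch. It assumes the kernel exposes its config at /proc/config.gz (on Raspberry Pi OS that may first require loading the `configs` module); the `check_sata_config` function name is my own, for illustration only.

```shell
# Report whether the two kernel options named above are built in (=y)
# or built as modules (=m) in a kernel config read from stdin.
# check_sata_config is a hypothetical helper name, not part of any tool.
check_sata_config() {
  cfg=$(cat)
  for opt in CONFIG_ATA CONFIG_SATA_AHCI; do
    if printf '%s\n' "$cfg" | grep -Eq "^${opt}=(y|m)\$"; then
      echo "$opt: enabled"
    else
      echo "$opt: missing"
    fi
  done
}

# Example (assumes /proc/config.gz exists; if it does not, try
# `sudo modprobe configs` first):
#   zcat /proc/config.gz | check_sata_config
```

If either option shows up as missing, the card's drives will not appear in lsblk no matter how they are cabled.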

Once that's done, you should be able to see any drives attached to the card after boot using lsblk, for example:

$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 1 223.6G 0 disk

sdb 8:16 1 223.6G 0 disk

mmcblk0 179:0 0 29.8G 0 disk

├─mmcblk0p1 179:1 0 256M 0 part /boot

└─mmcblk0p2 179:2 0 29.6G 0 part /

nvme0n1 259:0 0 232.9G 0 disk

(Wait... how is there also an NVMe drive there?! Well, I'm also testing some PCI multi-port switches with the Pi—follow that issue for progress.)
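For the later steps it helps to have just the SATA device paths, without the microSD card or NVMe drive mixed in. A rough sketch, assuming the default lsblk column layout shown above and that your SATA drives show up as sdX (USB drives do too, so check the list by eye); `sata_disks` is a made-up helper name:

```shell
# Read default `lsblk` output on stdin and print /dev paths for whole
# disks named sdX. Column 6 is TYPE in the default layout.
# sata_disks is a hypothetical helper name used for illustration.
sata_disks() {
  awk '$6 == "disk" && $1 ~ /^sd[a-z]+$/ { print "/dev/" $1 }'
}

# Example:
#   lsblk | sata_disks
```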

Partition the drives with fdisk

For each of the drives that were recognized, if you want to use it in a RAID array (which I do), you should add a partition. Technically it's not required to partition before creating the array... but there are a couple small reasons it seems safer that way.

So for each of my devices (sda through sdd), I ran fdisk to create one primary partition:

$ sudo fdisk /dev/sda

n # create new partition

p # primary (default option)

1 # partition 1 (default option)

2048 # First sector (default option)

468862127 # Last sector (default option)

w # write new partition table

There are ways you can script fdisk to apply a given layout to multiple drives at the same time, but with just four drives, it's quick enough to go into fdisk, press n, press 'enter' to accept each of the defaults, then press w to write the partition table and exit.
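As one example of the scripted route, here is a sketch that uses sfdisk (fdisk's scriptable sibling from util-linux) rather than fdisk itself, which is a swap on my part and not what I ran above. The script line `,,L` means default start, default size, Linux partition type. The `partition_disks` name and the `RUN` variable are hypothetical; by default the function only prints what it would do, because running it for real wipes the partition table on every disk you pass in.

```shell
# Create one Linux partition spanning each given disk with sfdisk.
# Dry run by default: prints the commands. Set RUN=1 to execute them.
# partition_disks and RUN are hypothetical names for illustration.
partition_disks() {
  for disk in "$@"; do
    if [ "${RUN:-0}" = "1" ]; then
      # Destructive: replaces the partition table on $disk.
      echo ',,L' | sudo sfdisk "$disk"
    else
      printf "echo ',,L' | sudo sfdisk %s\n" "$disk"
    fi
  done
}

# Example (dry run):
#   partition_disks /dev/sda /dev/sdb /dev/sdc /dev/sdd
```

Check the printed device names against lsblk before setting RUN=1.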

Create a RAID device using mdadm

At this point, we have four independent disks, each with one partition spanning the whole volume. Using Linux's Multiple Device admin tool (mdadm), we can put these drives together in any common RAID arrangement.

I'm going to create a RAID 10 array for my own use—you can check out the associated video linked above for the reasons why I chose RAID 10 instead of something else.

# Install mdadm.

sudo apt install -y mdadm

# Create a RAID 10 array using four drives.

sudo mdadm --create --verbose /dev/md0 --level=10 --raid-devices=4 /dev/sd[a-d]1

# Create a mount point for the new RAID device.

sudo mkdir -p /mnt/raid10

# Format the RAID device.

sudo mkfs.ext4 /dev/md0

# Mount the RAID device.

sudo mount /dev/md0 /mnt/raid10

Troubleshooting mdadm issues

I ran into a few different issues when formatting different sets of disks. For example, when I was trying to format four HDDs the first time, I got:

mdadm: super1.x cannot open /dev/sdd1: Device or resource busy

mdadm: /dev/sdd1 is not suitable for this array.

mdadm: create aborted

And further, I would get:

mdadm: RUN_ARRAY failed: Unknown error 524

And the solution I found in this StackOverflow question was to run:

echo 1 | sudo tee /sys/module/raid0/parameters/default_layout

I also ran into the message "Device or resource busy" when I tried formatting four SSDs, and it would always be a different device that was listed as the one being busy. It looked like a race condition of some sort, and after some Googling, I found out that's exactly what it was! The post "mdadm: device or resource busy" had the solution: pause udev's event queue while creating the volume, for example:

$ sudo udevadm control --stop-exec-queue

$ sudo mdadm --create ...

$ sudo udevadm control --start-exec-queue
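One risk with the three commands above is forgetting to restart the udev queue if mdadm fails partway through. A small wrapper can take care of that. This is just a sketch: `with_udev_paused` and the `UDEVADM` override variable are names I made up, and the only udevadm options used are the two shown above.

```shell
# UDEVADM is a hypothetical override hook (handy for dry runs);
# by default it runs the real udevadm through sudo.
UDEVADM=${UDEVADM:-sudo udevadm}

# Pause the udev event queue, run the given command, then resume the
# queue whether or not the command succeeded. Returns the command's
# exit status.
with_udev_paused() {
  $UDEVADM control --stop-exec-queue || return 1
  status=0
  "$@" || status=$?
  $UDEVADM control --start-exec-queue
  return "$status"
}

# Example:
#   with_udev_paused sudo mdadm --create --verbose /dev/md0 \
#     --level=10 --raid-devices=4 /dev/sd[a-d]1
```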

You may also want to watch the progress and status of your RAID array while it is being initialized or at any given time, and there are two things you should monitor:

- Get the detailed status of an MD device:

sudo mdadm --detail /dev/md0

- Get the current status of MD:

cat /proc/mdstat
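If you only care about how far along the initial sync is, you can pull the percentage out of /proc/mdstat. A minimal sketch, assuming the kernel prints progress in the usual `resync = N%` or `recovery = N%` form; `sync_progress` is a hypothetical helper name:

```shell
# Read /proc/mdstat-style text on stdin and print any resync or
# recovery percentages, with spaces stripped (for example resync=12.5%).
# Prints nothing when no sync is in progress.
# sync_progress is a hypothetical helper name used for illustration.
sync_progress() {
  grep -oE '(resync|recovery) *= *[0-9.]+%' | tr -d ' '
}

# Examples:
#   sync_progress < /proc/mdstat
#   watch -n 5 cat /proc/mdstat    # or just refresh the whole file
#   sudo mdadm --wait /dev/md0     # block until the sync finishes
```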

And if all else fails, resort to Google :)

Making the MD device persistent across reboots

To make sure mdadm automatically configures the RAID array on boot, persist the configuration into the /etc/mdadm/mdadm.conf file:

# Configure mdadm to start the RAID at boot:

sudo mdadm --detail --scan | sudo tee -a /etc/mdadm/mdadm.conf

And to make sure the filesystem is mounted at boot, add the following line to the bottom of your /etc/fstab file:

# Add the following line to the bottom of /etc/fstab:

/dev/md0 /mnt/raid10 ext4 defaults,noatime 0 1
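An alternative some people prefer is to reference the filesystem by UUID instead of by /dev/md0, since md device names can occasionally change between boots. The sketch below only builds the line for you to review. Note that the `nofail` option and the fsck pass value of 2 are my assumptions here, not what I used above, and `fstab_line` is a hypothetical helper name.

```shell
# Print an /etc/fstab line for an ext4 filesystem identified by UUID.
#   $1 = filesystem UUID, $2 = mount point
# nofail (keep booting if the array is absent) and fsck pass 2 are
# assumptions for illustration, not settings taken from the post.
fstab_line() {
  printf 'UUID=%s %s ext4 defaults,noatime,nofail 0 2\n' "$1" "$2"
}

# Example:
#   fstab_line "$(sudo blkid -s UUID -o value /dev/md0)" /mnt/raid10
```

Paste the printed line into /etc/fstab by hand, then run `sudo mount -a` to check that it mounts cleanly before you reboot.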

Reset the disks after finishing mdadm testing

One other thing I had to do a number of times during my testing was delete and re-create the array, which is not too difficult:

# Unmount the array.

sudo umount /mnt/raid10

# Stop the device.

sudo mdadm --stop /dev/md0

# Zero the superblock on all the members of the array.

sudo mdadm --zero-superblock /dev/sd[a-d]1

# Remove the device.

sudo mdadm --remove /dev/md0

Then also make sure to remove any entries added to your /etc/fstab or /etc/mdadm/mdadm.conf files, since those would cause failures during startup!
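To spot leftover entries quickly, something like the following can help. It is only a sketch: it looks for ARRAY lines and lines starting with /dev/mdN, so it would miss an fstab entry that mounts the array by UUID or label. `find_md_entries` is a made-up name.

```shell
# Print (with file name and line number) any lines that start with an
# mdadm ARRAY definition or a /dev/mdN device in the given files.
# Exits non-zero when nothing is found.
# find_md_entries is a hypothetical helper name used for illustration.
find_md_entries() {
  grep -nE '^(ARRAY[[:space:]]|/dev/md[0-9])' "$@"
}

# Example:
#   find_md_entries /etc/fstab /etc/mdadm/mdadm.conf
```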

Benchmarks

The first thing I wanted to test was whether a SATA drive—in this case, a Kingston SATA 3 SSD—would run faster connected directly through a SATA controller than it ran connected through a USB 3.0 controller and a UASP-enabled USB 3.0 to SATA enclosure.

As you can see, connected directly via SATA, the SSD can give noticeably better performance on all metrics, especially for small file random IO, which is important for many use cases.

Connected through USB 3.0, a SATA SSD is no slouch, but if you want the best possible performance on the Pi, using direct NVMe or SATA SSD storage is the best option.

Next I wanted to benchmark a single WD Green 500GB hard drive. I bought this model because it is pretty average in terms of performance, but mostly because it was cheap to buy four of them! To keep things fair, since it couldn't hold a candle to even a cheap SSD like the Kingston, I benchmarked it against my favorite microSD card for the Pi, the Samsung EVO+:

While the hard drive does put through decent synchronous numbers (it has more bandwidth available over PCIe than the microSD card gets), it gets obliterated by the itsy-bitsy microSD card on random IO!

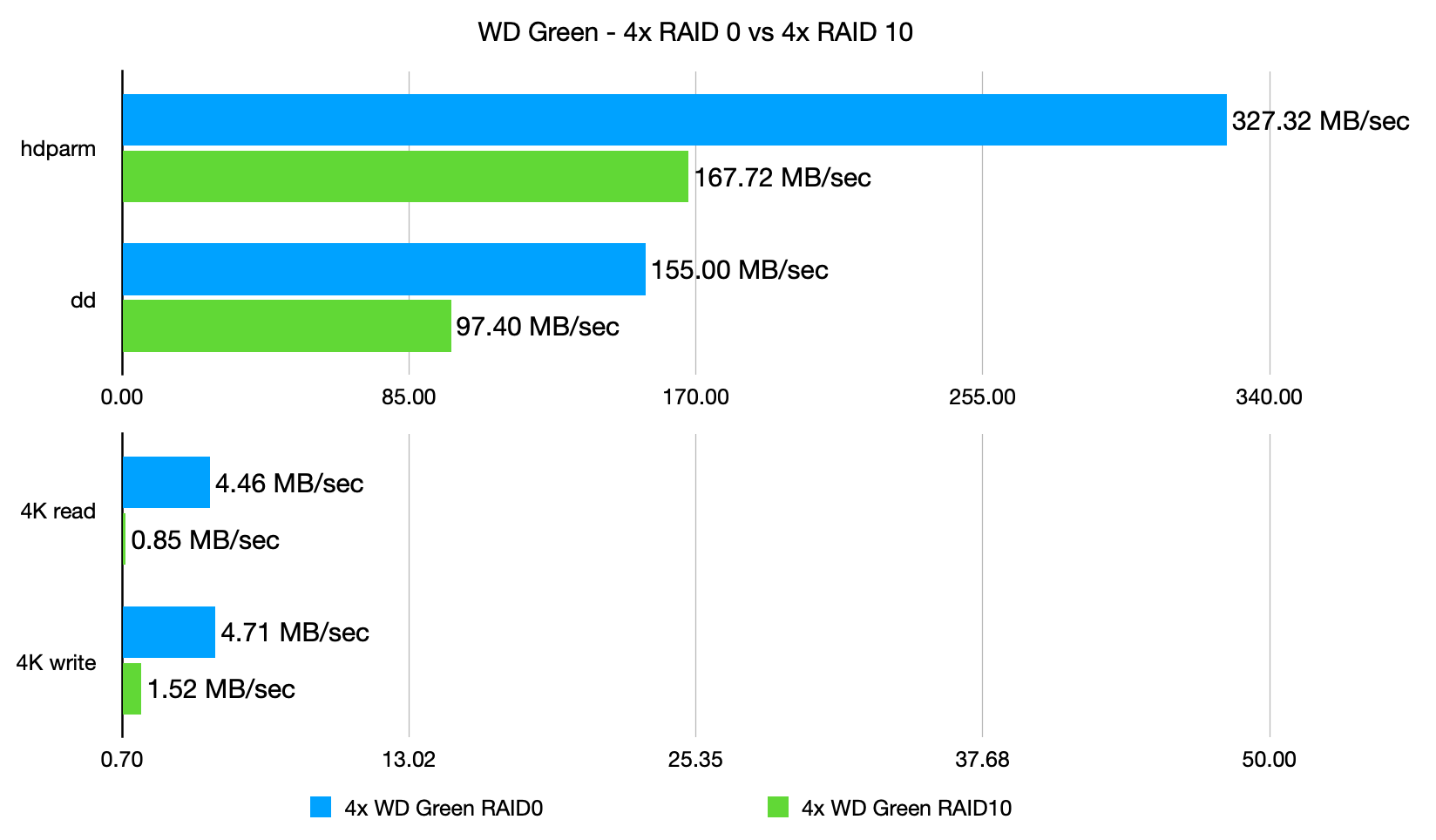

But putting slower hard drives into RAID can give better performance, so I next tested all four WD Green drives in RAID 0 and RAID 10:

And, as you'd expect, RAID 0 basically pools all the drives' performance metrics together, to make for an array that finally competes with the tiny microSD card for 4K performance, while also besting the Kingston SSD for synchronous file copies.

RAID 10 backs off that performance a bit, but it's still respectable and offers a marked improvement over a single drive.

But I decided to go all out (well, at least within a < $100 budget) and buy three more Kingston SSDs to test them in the same RAID configurations:

And the result was a little surprising at first, but it makes sense: since the Raspberry Pi's single PCI Express 2.0 x1 lane only offers around 5 Gbps theoretical bandwidth, the maximum real-world throughput you could get no matter how many SSDs you add is around 330 MB/sec.
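For a rough sense of where that ceiling comes from: a PCIe 2.0 lane signals at 5 GT/s and uses 8b/10b encoding, so only 8 of every 10 bits on the wire are payload. The arithmetic below is a back-of-the-envelope sketch (the function names are mine), and it ignores packet and protocol overhead, which eats into the number further.

```shell
# Payload bandwidth of a PCIe 2.0 link in MB/s, before protocol overhead:
# 5000 Mbit/s per lane * 8/10 (8b/10b encoding) / 8 bits per byte.
# $1 = number of lanes (defaults to 1). Hypothetical helper name.
pcie2_payload_mb_per_sec() {
  lanes=${1:-1}
  echo $(( lanes * 5000 * 8 / 10 / 8 ))
}

# Integer percentage of $1 relative to $2. Hypothetical helper name.
percent_of() {
  echo $(( $1 * 100 / $2 ))
}

# Example:
#   pcie2_payload_mb_per_sec 1    # 500
#   percent_of 330 500            # 66
```

So the ~330 MB/sec I measured is about two thirds of the 500 MB/sec a single lane could carry even in the best case.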

So there are other IO pressures that the Pi reaches that make RAID for SATA SSDs less of a performance option than for spinning hard drives. In some of my testing, I noticed what looked like queueing of network packets as the Pi had to move network traffic to the RAID array disks, and I'm guessing the Pi's SoC is just not built to pump through hundreds of MB of traffic indefinitely.

Speaking of network traffic, the last test I did was to install and configure both Samba and NFS (see Samba and NFS installation guides in this issue), to test which one offered the best performance for network file copies:

It looks like NFS holds the crown on the Pi, though if you use Windows or Android/iOS primarily, you might see slightly different results or have a harder time getting NFS going than Samba.

Conclusion

I think the Compute Module 4, with its built-in Gigabit networking and ability to use one or more PCI Express cards, is the first Raspberry Pi that I would consider 'good' for running a reliable and performant NAS.

In my case, it's already faster than the old Mac mini I have been using as a NAS for years, which has only USB 2.0 ports, limiting my file copies over the network to ~35 MB/sec!

But I would definitely like someone to design a nice case that holds the Pi, a specialized (smaller) IO board, a PCIe SATA adapter, a fan, and four SATA drives—ideally designed in a nice, compact form factor!

Here are links (Amazon affiliate links—gotta pay the bills somehow!) to all the different products I used to build my SATA RAID array:

- IO Crest 4-port SATA PCI Express 1x card

- CableCreation low-profile SATA cable 5-pack

- CoolerGuys 12v 2A Molex power adapter (for drives)

- Cable Matters Molex to SATA power adapter

- StarTech 4x SATA power splitter

- Phanteks Stackable 3.5" HDD brackets

- Corsair Dual SSD 3.5" mounting bracket

- ICY DOCK ExpressCage 4-bay 2.5" hot-swap cage

- Kingston 120GB SATA SSD

- WD Green 500GB HDD

Comments

Wow. Just - wow. I work with storages for last ten years, maybe more, but what you do here is just excellent :). Thanks for sharing your work and good luck!

Great write up, was thinking about this as a project (at some stage), you've given me so much really useful information and many new tabs!

No link for the RPI4? Or its power supply? What about a power switch and display / indicator for status?

I linked to those in my initial Pi Compute Module 4 Review post. Also, for now I don't use a power switch (though later on I might wire one up), nor do I have any kind of indicators (yet).

Using iSCSI (as opposed to NFS or SMB) can be much more efficient. (It's single client, so synchronization primitives are less important. Also, the client OS can do more caching.) Obviously, it's not an option when you need to share files — you need to unmount it from one client and mount it on another. But it's a good option if you just want to have external storage.

You probably get better efficiency if you use something like LVM and share a logical volume (rather than a file).

Did you find any solution to what you suspect is linux flushing to disk and starving the nic of io bandwidth, continuously tanking the network transfer speed? I'm pretty sure this is also what I'm running into with my laptop usb drive raidz nas that's limited by the 1x pcie lanes to the pch

Very thorough job. I’ve been wondering about using Pi for a Raid1 with 1 or 2 TB SSDs for storing high value data backups. I can imagine an enclosure... thanks for giving this idea some new strength.

How much RAM does the Raspberry Pi Compute Module 4 have? Is it the 1GB, 4GB or 8GB version?

This one is the 4GB version, and running free -h during the benchmarking shows the Pi is filling up its RAM with filesystem cache data. So more RAM would definitely help make for more consistent transfers, but I don't think that's the only bottleneck, as copies would still start showing slowdowns after only 1-2 GB sometimes, even after a fresh reboot.

Jeff... This is a pretty awesome article, man. I appreciate you a lot for doing this.

Really interesting article. One question I do have is if a PCIe X1 riser card would work on this?

Wanted to place a RPi 4 compute module and IO in a generic 1U rack mounted shallow depth server case and, naturally, that'd require the SATA card to be in a riser rather than standing upright.

Thanks for this amazing blog and video.

dmraid 10 is not exactly 1+0. It does striping and mirroring "combined" instead of one after the other. In your benchmarks did you try to play with raid10 layout options (near, far, ...); I was always wondering how the impact performance for spinning HDD versus SSD.

Amazing work! I’m currently working on designing a customized IO board for the CM4 for this exact purpose. Have you been able to test different SATA chipsets? Could I send you some to try?

For my board, I’m currently eying the JMB582 or JMB585 which are pci to 2 or 5 port SATA chips, respectively. It turns out that SATA chips are very difficult to get a hold of and JMicron is the only one that has been responsive.

I was leaning toward a 2 port NAS since 3.5 in hard drives are available in 18tb and soon 20tb variants. But a 5 port compact SATA SSD NAS would also be interesting. Plus, power requirements would be far lower. Thoughts on which you’d prefer?

You only have one PCIe lane to work with whether you have a regular rpi4 (the USB3 is attached to it) or you have an expansion card. The limiting factor in the performance for a NAS on RPI is always going to be the 1GB Ethernet port. So why do you think it matters if your drives are USB or SATA attached? - The (roughly) 5Gbits of PCIe are always going to be bottlenecked by the 1Gbit of Ethernet.

Two reasons:

Thank you for sharing your benchmark and all the steps.

I already have prepared a NAS with my raspi 4, and I was wondering what power supply are you using for feeding 4 x WD HDD.

I have seen the power supply 12V/2A you use for feeding 4 x Kingston SSD, but not the one for 4 x HDD. Is enough with the same power supply?

Thanks for answering.

It seemed to work in both cases, though I did my actual benchmarks for the HDDs while they were connected through a 600W power supply (overkill, I know!). But the HDDs on the label had the same minimal power requirement, so I don't see why the 12V/2A supply wouldn't work for them.

Startup current is usually way higher than current required during run, hence I'd expect the 12V/2A supply, even if it works initially, to be killed by overload within a few months. For a NAS that is expected to run for years I'd recommend a current output calculated as a factor of 2x-3x from all disk power requirements

Very true; I've since switched to doing all my testing using an actual PC PSU or bench supply, and I'm encouraging board makers to also use at least 12V/5A in their designs so they could make sure drives get enough power.

Hi,

I am not so experienced with pi, but why didn't you consider OMV ? ( I don't know if it supports raid, but there are Free NAS and others with GUI)

One thing you should have mentioned is backup power; the files will be doomed if the power goes out.

Hi thank you for sharing this valuable information.

I'm looking for a new project and this is looking good.

I want to replace my old NAS with a low-energy but powerful replacement.

Did you look at the energy consumption of your setup?

Cheers from the Netherlands.

I covered that in the video here: https://youtu.be/oWev1THtA04?t=1096 — but basically it uses ~6W at idle (with drives on), and ~12W max under highest load writing files over the network.

Love your articles and videos! Something to think about next time...try using the BTRFS filesystem instead of mdadm. I abandoned md based raid over 10 years ago already and have been using BTRFS with great success on all my production servers in various raid configurations. BTRFS makes managing discs and filesystems and raid a pleasure. It is so incredibly easy to add new drives and dynamically expand your raid array while it's running. You can even change raid levels while the array is online. BTRFS also supports on the fly compression, which makes your storage go a little bit further than normal. Don't listen to the naysayers who say BTRFS is not production ready and not stable, that's a myth. I've never had data loss in over 10 years due to BTRFS, in fact it's saved my bacon more often than I can count. Major linux distros like RedHat and Suse are making it their default file system for a reason. I'd be really curious to see benchmarks on the hardware you're using with BTRFS compared to the conventional md raid array you used. Keep up the great work!

Wow just TWENTY bucks for a 120GB SSD ? Why would anybody boot a pi off a SD any more ? Any idea whether a pi4 can drive one of those disks without special power needed ?

I currently have a rpi4 2gb, with a usb3.0 to sata cable connected to a 1tb western digital blue SSD, the hard drive is powered directly from the Pi, and the pi is powered by a POE+ hat.

Thanks, if only there was a case available for this??

Hi Jeff,

I'm active in the openmediavault project and would like to link direct to "Benchmarks" here (all a long the lines of my GH issue #36)

Would you mind to create a deeplink to "Benchmarks"?

I have added a named anchor #benchmarks, so you can link to https://www.jeffgeerling.com/blog/2020/building-fastest-raspberry-pi-nas-sata-raid#benchmarks now. Thanks!

And I'm thinking I should go ahead and get OMV working on one of these NASes I'm building... :)

Thanks Jeff

added link in https://forum.openmediavault.org/index.php?thread/37285-rpi4-read-write… looking forward to your review of OMV :)

I like the visualization of your benchmarks any pointer show how you create them maybe in an automated approach?

Comparing performance results of individually build systems is attractive to builders (i.e. Gamers) haven't found such a site for NAS builders yet.

As a starting point I found

https://blog.yavilevich.com/2015/10/diskspdauto-automating-io-performan…

What is your view on this opportunity?

Happy New Year

Michael

Match for the envisioned enclosure?

On openmediavault forum the section "My NAS build" has good examples (i.e. case : CFI A7879 or A7979 mini-ITX case)

that would seem to satisfy 'case that holds the Pi, a specialized (smaller) IO board, a PCIe SATA adapter, a fan, and four SATA drives—ideally designed in a nice, compact form factor!'

Please note: I've not tested this myself!

First of all thanks for all the good work. I've also used the Marvell 9215 and all your information was very helpful! The one and only problem was that I got these messages about failing to load modules 'mraid' and 'crypto' :

Simple solution for that is to install packages "libblockdev-mdraid2" and "libblockdev-crypto2".

Jeff,

Could you publish the exact benchmark tests you are using please so that we can do like for like comparisons.

I'm using the disk/usb/microsd benchmark scripts from this repository: https://github.com/geerlingguy/raspberry-pi-dramble/tree/master/setup/benchmarks

I have this SATA card, https://www.amazon.co.uk/gp/product/B00AZ9T3OU/ref=ppx_yo_dt_b_asin_tit… it looks like its exactly the same but I'm not experiencing that heat issue that you have. I don't have the device to measure the temperature but though it gets hot, I can certainly hold my finger on it without discomfort.

Hi Jeff,

I've just bought the compute module 4 and dev board, just waiting for it to ship. My plan was to try and connect it via eSATA to a JBOD enclosure.

Is this something you would be interested in? I need to wait a couple of weeks before my Pi arrives, then I can start buying random eSATA cards, and enclosures, if you have any recommendations/suggestions that would be great :)

I've been testing a few HBA solutions... I have had to put that on pause momentarily though. Anyways, check out some options here: SATA Cards and Storage cards for Raspberry Pi Compute Module 4.

Hey Jeff,

I was wondering if this config can be used with OMV? In that case how should I install the driver?

I'd love to see how this pairs with something like OpenMediaVault

Hi Jeff,

Thank you so much for this amazing work.

If I may ask a question: this IO Crest card is suppose to be a non-RAID controller but you still manage to make a RAID array with it. So what is the difference to the most expensive RAID controller cards, such as StarTech.com PEXSAT34RH https://www.amazon.se/dp/B00BUC3N74/?coliid=I1XNXO6E8379N0&colid=OWWSMX…

Thanks,

André

Hardware RAID controllers offload the RAID tasks from the CPU to the card itself, which on a Pi can actually make the storage a bit faster. I'll be doing a video very soon talking more about hardware RAID!

Thanks Jeff, great educational work you do to the community. Cheers!

So, you are actually modifying the Pi OS kernel to get the SATA card to work? Do you have to worry about updates to Pi OS overwriting your modified portion of the kernel?

Or did the Raspberry Pi organization incorporate your changes formally for operational drivers for the IO Crest SATA card?

Regards,

Fun

OS upgrades that include kernel updates should NOT change the modules or drivers you have enabled. It should read your current kernel config and apply changes in addition to what you already have.

Thanks for sharing this - very complete and informative!

Hi Jeff,

Great video! Really got me into playing around with my pi as well. How are you powering the external drives (3.5")? I saw you use molex to sata adapters, do you have any suggestions for ac to 12v/5v molex adapter?

Another related question, if this were to be used as a nas which means 24/7 operation, do you trust the molex power source? In my research the old saying "molex to sata, lose all your data" came up wich had me second guessing. Would love to hear your thoughts.

Waiting for parts to start testing and then 3D printing a, very small, case for this :D

Is it possible or practical do set up raid on a regular raspberry pi 4 and open media vault, or other software?

OMV (and most other RAID-using software) won't allow RAID creation over a bunch of USB volumes.

FYI: https://github.com/dmaziuk/naspi

I managed to print a case. It's very rough at the moment but does get the job done.

https://photos.app.goo.gl/oZub1otJyzTP42mt5

https://photos.app.goo.gl/uWrdXK8asqWKCFHY9

I want to clean up the wiring so I can power both the drives and board from the power supply I'm using for the drives. Also need to add a hole for the WIFI antenna.

Oh, nice! Looking great already, and if you have anything posted to Thingiverse or a blog or anywhere, let me know and I'll share it with others who might want to build something like it!

Hi Jeff,

nice setup! I'm in process of prototyping a DIY NAS and I'm considering also the CM4. I also saw your video where you compared it aginst the Asustor.

I'm concerned about the power consumption for the CM4 and the SSD-caching. Those two factors would incline the balance towards the Asustor, but I don't need the fancy OS nor the apps (for that I already have dedicated server I'm very happy with).

Could you comment on those points?

Hi Jeff

Just wanted to draw your attention to the Argon Eon 4-bay NAS box, currently on KS :)

It's a neat project, for sure—I'm just less interested in Pi-4-based solutions since the CM4 can provide native SATA and better speeds now.

Hi Jeff, thanks for your great work! I just set up a very similar system to your proposal and keep running into these issues that seem related to the Marvell 9215 SATA chipset (Kernel.org Bugzilla id 43160), especially while running bonnie++.

Did you encounter something similar while testing and/or work around it somehow?

Cheers,

Sebastian

Great video. Don't have Mac or pi, but raid, backup, SSD vs HDD, Linux, NFS vs SMB info is terrific.

I believe Adata 650s are less expensive and maybe slightly faster than the Kingston's

With the IO Crest esata controller I have two questions. 1. Is the size limit for the drives reasonable for large storage? There are 16Tb drives out there right now, for example. 2. Does the controller have staggered startup features so the psu does not get overwhelmed? Thanks.

Pity you bought the Marvell 9215 card:

The Marvell 88SE9230 does 4 drive Hyperduo = Disk Tiering in its Max mode with write caching.

ie: You can put in 2 HDDs and have each tiered to its own SSD.

https://www.marvell.com/content/dam/marvell/en/public-collateral/storag…

Reviews I have seen show the 88SE9230 to be slower than Intel's chipset, BUT their Hyperduo somehow manages to beat Intel's RST!

ie: Hyperduo may well give you 2 fast virtual drives (maybe they can be RAIDed?) at a much lower cost.

That would make an interesting blog post!

Hi, I'm building this project but I don't know whether to build a hard disk Seagate 12TB HDD Exos X14 SATA 6Gb/s 512e 7200RPM 3.5" Enterprise Hard Drive

Great work Jeff. These days, I am considering using Pi 5 (8GB RAM) to build a NAS with RAID 6 support (With the help of PCIe). In the market, Is there any RAID card that support Pi 5? I am really looking forward to hearing news from you.

Absolutely brilliant! Thank you so much for the article. I guess replacing Raspberry Pi with Orange Pi 5 Plus would be a significant improvement.