Don't pay $800 for Apple's 2TB SSD upgrade

Apple charges $800 to upgrade from the base model M4 Mac mini's 256 GB of internal storage to a more capacious 2 TB.
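
To put that number in perspective, here is a quick back-of-the-envelope calculation of what the upgrade costs per added gigabyte. It uses only the figures quoted above, and it assumes 2 TB is counted as 2000 GB (decimal units); the variable names are just for illustration.

```python
# Figures from the text: $800 to go from 256 GB to 2 TB of internal storage.
UPGRADE_PRICE_USD = 800
BASE_GB = 256
UPGRADED_GB = 2000  # assumption: 2 TB counted as 2000 GB (decimal units)

# Only the extra capacity is being paid for, since the base 256 GB is included.
added_gb = UPGRADED_GB - BASE_GB
usd_per_added_gb = UPGRADE_PRICE_USD / added_gb

print(f"Added capacity: {added_gb} GB")
print(f"Cost per added GB: ${usd_per_added_gb:.2f}")
```

That works out to roughly 46 cents for every extra gigabyte.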

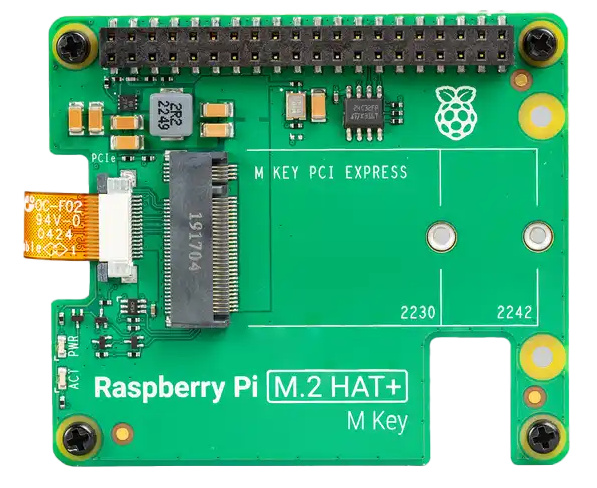

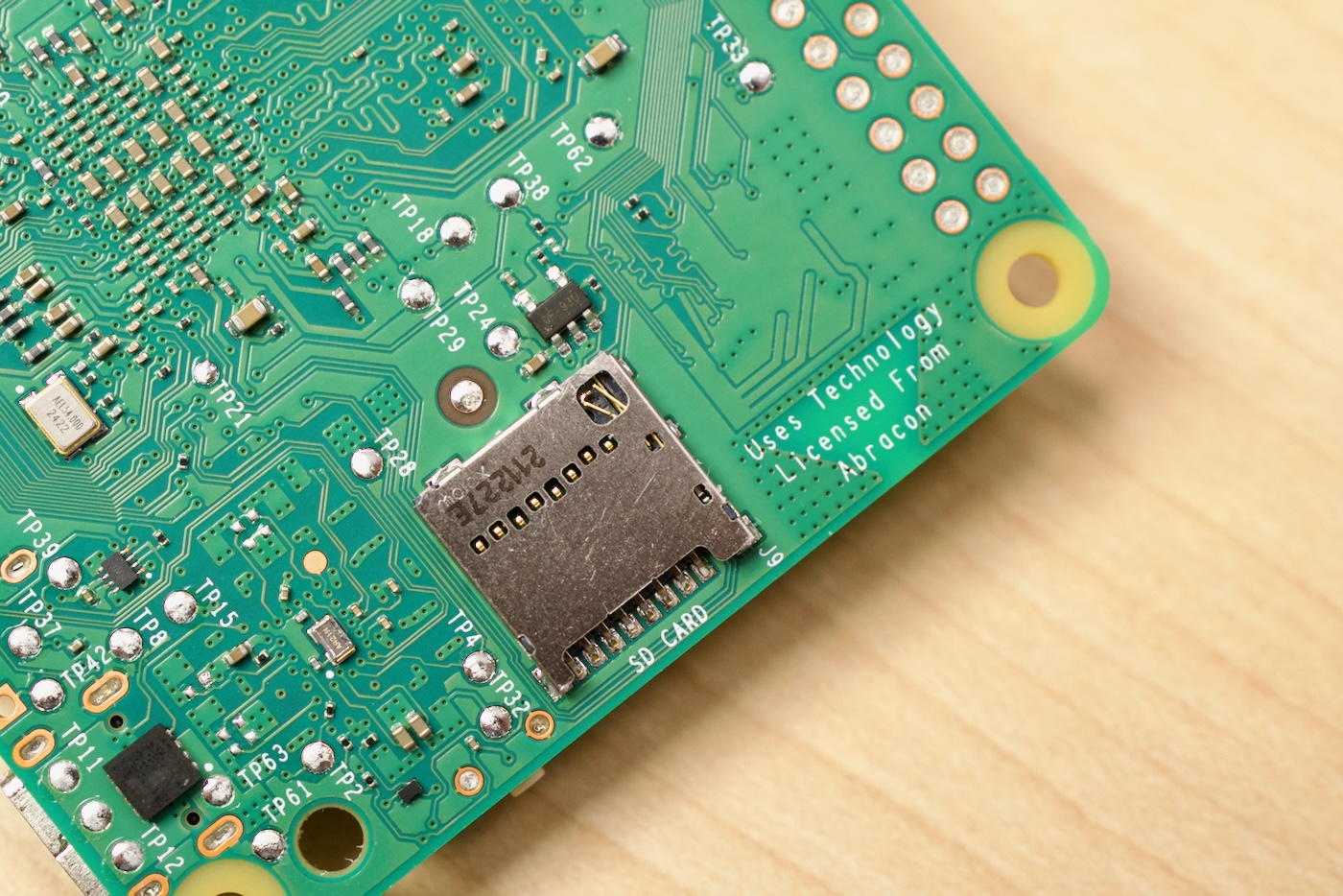

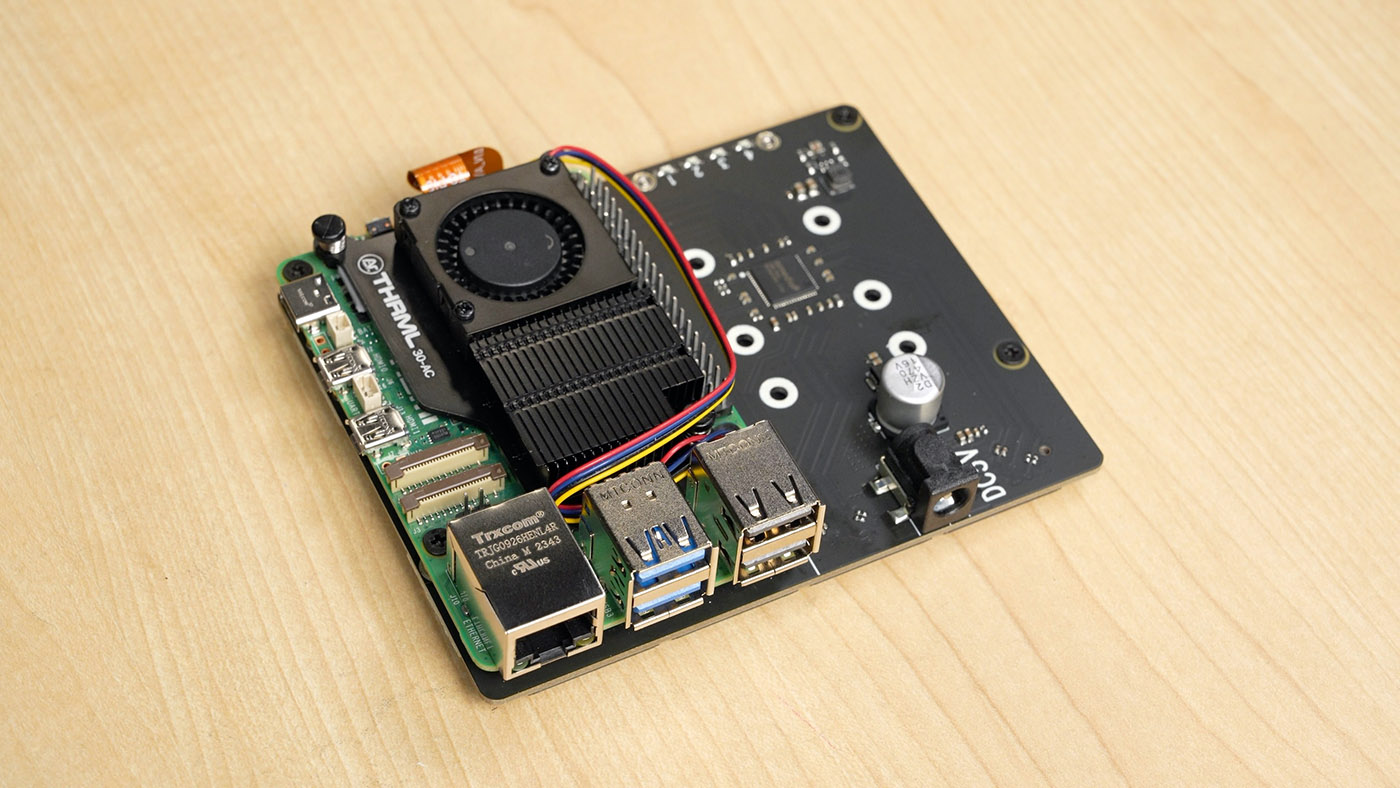

Pictured above are a standard 2230-size M.2 NVMe SSD (one made by Raspberry Pi, in this case) and Apple's proprietary not-M.2 drive. Apple's drive has NAND flash chips on it but no NVM Express controller, the 'brains' in a little chip that lets NVMe SSDs work in any computer with a standard M.2 PCIe slot.