That is indeed a puzzling question, brought about by Raspberry Pi's introduction of the newest Raspberry Pi 5 model, with 16 GB of RAM.

I spent a couple weeks testing the new Pi model, and found it does have its virtues. But being only $20 away from a complete GMKTec N100 Mini PC with 8GB of RAM, it's probably a step too far for most people.

For most, the 2 GB ($50) or 4 GB ($60) Pi 5 is a much better option. Or if you're truly budget conscious and want a well-supported SBC, the Pi 4 still exists, and starts at $35. Or a Pi Zero 2 W for $15.

And for stats nerds, the pricing model for Pi 5 follows this polynomial curve almost perfectly:

...very much unlike Apple's memory and storage pricing for the M4 Mac mini, which follows an equation that ranges from "excellent deal" to "exorbitant overcharge".

Performance

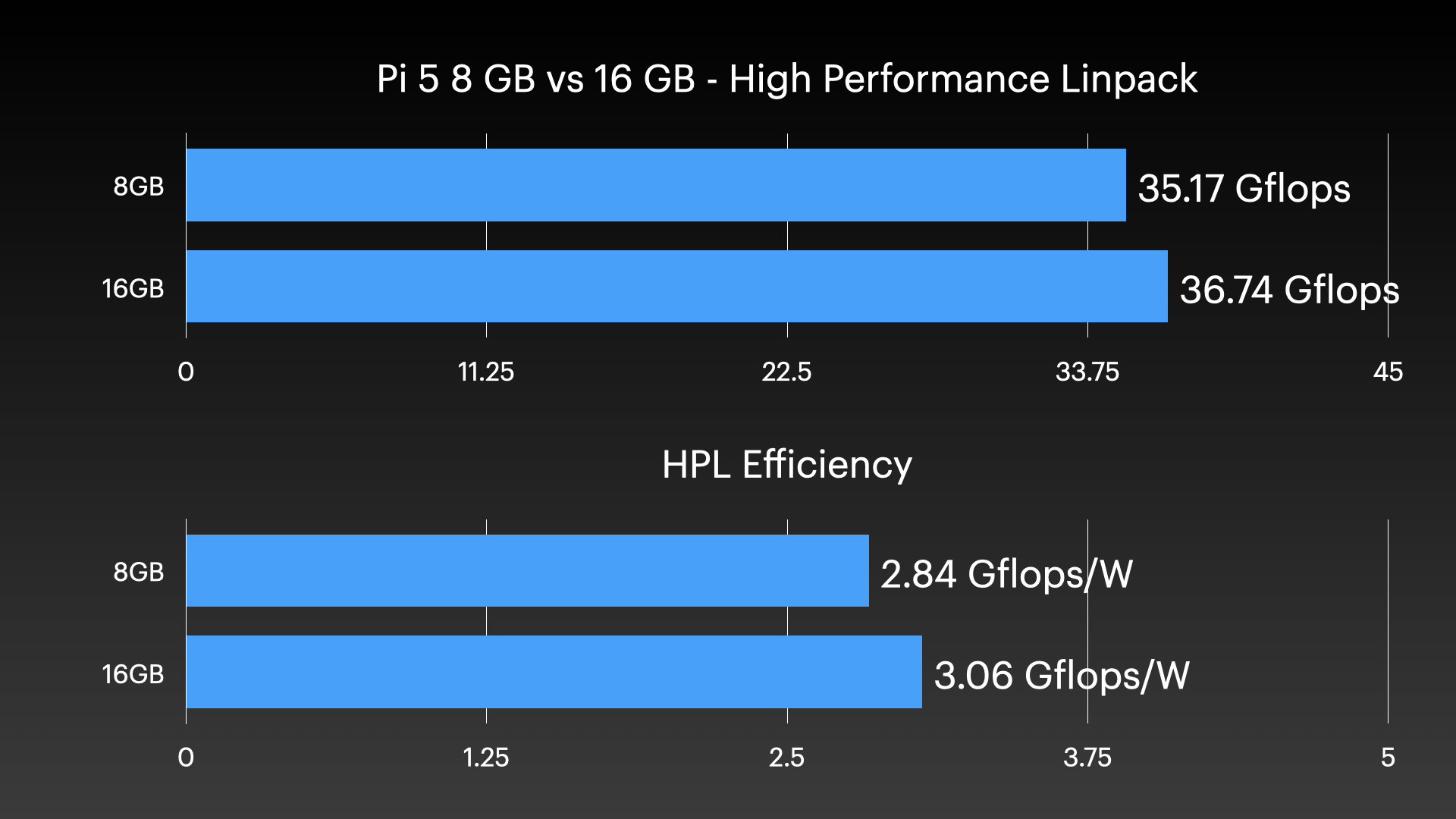

Before I get to the reasons why some people might consider spending $120 on a Pi 5, I ran a bunch of benchmarks, and one of the more pertinent results is HPL:

This compares the performance of the 8 GB Pi 5 against the new 16 GB model. For many benchmarks, the biggest difference may be caused by the 16 GB model having the newer, trimmer D0 stepping of the BCM2712. But for some, having more RAM helps, too.

Applications like ZFS can cache more files with more RAM, leading to lower latency and higher bandwidth file copies—in certain conditions.

For all my 16 GB Pi 5 benchmarks, see this follow-up comment on my Pi 5 sbc-reviews thread.

5 Reasons a 16 GB Pi 5 should exist

But I distilled my thoughts into a list of 5 reasons the 16 GB Pi 5 ought to exist:

- Keeping up with the Joneses: Everyone seems to be settling on 16 GB of RAM as the new laptop/desktop baseline—even Apple, a company notoriously stingy on RAM in its products! So having a high-end SBC with the same amount of RAM as a low-end desktop makes sense, if for no other reason than just to have it available.

- LLMs and 'AI': Love it or hate it, Large Language Models love RAM. The more, the merrier. The 8 GB Pi 5 can only handle up to an 8 billion parameter model, like `llama3.1:8b`. The 16 GB model can run much larger models, like `llama2:13b`. Whether getting 1-2 tokens/s on such a large model on a Pi 5 is useful is up to you to decide. I posted my Ollama benchmark results in this issue.

- Performance: I already discussed this above, but along with the latest SDRAM tuning the Pi engineers worked on, this Pi is now the fastest and most efficient, especially owing to the newer D0 chip revision.

- Capacity and Consolidation: With more RAM, you can run more apps, or more threads. For example, a Pi 5 with 4 GB of RAM could run one Drupal or Wordpress website comfortably. With 16 GB, you could conceivably run three or four websites with decent traffic, assuming you're not CPU-bound. You could also run more instances on the same Pi of Docker containers or pimox VMs.

- AAA Gaming: This is, of course, a stretch... but there are some modern AAA games that I had trouble with on my eGPU Pi 5 setup which ran out of system memory on the 8 GB Pi 5, causing thrashing and lockups. For example: Forza Horizon 4, which seemed to enjoy using about 8 GB of system RAM total (alongside the 1 GB or so required by the OS and Steam).
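The LLM point in the list above can be sanity-checked with rough arithmetic. The sketch below is only an estimate under stated assumptions: the 4.5 bits per weight (roughly a 4-bit quantization), the 1.5 GB runtime overhead, and the 1 GB reserved for the OS are illustrative guesses, not measured values, and the function names are hypothetical.

```python
GB = 1_000_000_000  # decimal gigabyte, good enough for a rough estimate


def model_footprint_gb(params_billion, bits_per_weight=4.5, overhead_gb=1.5):
    """Estimate RAM needed to run a model, in GB.

    bits_per_weight and overhead_gb are assumptions for illustration:
    about 4.5 bits per weight for a 4-bit quantized model, plus a flat
    allowance for the runtime and context cache.
    """
    weights_gb = params_billion * 1e9 * bits_per_weight / 8 / GB
    return weights_gb + overhead_gb


def fits(params_billion, ram_gb, reserved_for_os_gb=1.0):
    """True if the estimated footprint fits in RAM after the OS takes its share."""
    return model_footprint_gb(params_billion) <= ram_gb - reserved_for_os_gb


if __name__ == "__main__":
    for params in (8, 13):
        for ram in (8, 16):
            verdict = "fits" if fits(params, ram) else "does not fit"
            print(f"{params}B model on {ram} GB Pi 5: "
                  f"~{model_footprint_gb(params):.1f} GB needed, {verdict}")
```

With these assumed numbers, an 8B model fits on the 8 GB board while a 13B model only fits on the 16 GB board, which matches the experience described above. Different quantizations or longer contexts would shift the result.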

I have a full video covering the Pi 5 16GB, along with illustrations of some of the above points. You can watch it below:

Comments

By looking at benchmarks that do not access RAM at all (for example 'openssl speed -elapsed -evp aes-256-cbc') we can see that SoC revision does (close to) nothing: you now measured on your 16GB D0 board 1368167 (one time 1368096, the other 1368238), I measured on a 4GB board 1368001 back in March (one time 1367818, the other 1368184). That's a 0.01% 'improvement' or in other words: results variation :)
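The "results variation" argument can be checked directly from the numbers quoted in this comment. A minimal sketch, using only those four scores (the helper names are hypothetical): it compares the gap between the two boards with the spread between two runs on the same board.

```python
def percent_diff(baseline, value):
    """Relative difference of value against baseline, in percent."""
    return (value - baseline) / baseline * 100.0


def mean(values):
    return sum(values) / len(values)


def run_spread(runs):
    """Spread between the lowest and highest run, in percent of the lowest."""
    return percent_diff(min(runs), max(runs))


# Scores quoted in the comment above, two runs per board.
D0_16GB_RUNS = (1368096, 1368238)
OLD_4GB_RUNS = (1367818, 1368184)

# Gap between the two boards, using the mean of each pair of runs.
board_gap = percent_diff(mean(OLD_4GB_RUNS), mean(D0_16GB_RUNS))

if __name__ == "__main__":
    print(f"gap between boards:      {board_gap:.4f}%")
    print(f"run spread, 16 GB board: {run_spread(D0_16GB_RUNS):.4f}%")
    print(f"run spread, 4 GB board:  {run_spread(OLD_4GB_RUNS):.4f}%")
```

The gap between boards comes out smaller than the run-to-run spread on the 4 GB board alone, which is why it reads as noise rather than an improvement.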

Talking about the comparison with the 8GB variant above: are these numbers also made with latest firmware from Jan 8th?

The 8 GB was on a Jan 6th revision, but according to the Pi engineer who told me about the update, it only changed timings on the D0 models (I think... could've misinterpreted it).

me

Same.

Linux is a mini hobby for me, not a full one. Pis are just a component of my real outcome-based making.

So I like not learning the nuances of different OS and keeping electronic accessory compatibility simple.

The Linpack performance is higher because you have more RAM and can use larger matrix N. To be fair, use the same matrix size N on both. IMHO you will not see a significant difference in that case.
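To see how much the usable matrix size changes with RAM, here is a rough sizing sketch. It assumes a common rule of thumb for HPL (not something stated in the post): choose N so that the N x N matrix of 8-byte doubles fills about 80% of memory, rounded down to a multiple of the block size NB. The 80% fraction, the NB of 256, and the function name are all assumptions for illustration.

```python
import math


def hpl_problem_size(mem_gib, fraction=0.8, nb=256):
    """Largest N (a multiple of nb) whose N x N double matrix fits in
    `fraction` of `mem_gib` GiB of RAM.

    fraction=0.8 and nb=256 are assumed rule-of-thumb defaults, not
    values taken from the benchmark runs discussed here.
    """
    mem_bytes = mem_gib * 1024**3
    # Each matrix element is an 8-byte double, so N^2 * 8 <= usable bytes.
    n = math.isqrt(int(fraction * mem_bytes / 8))
    return (n // nb) * nb


if __name__ == "__main__":
    for mem in (8, 16):
        n = hpl_problem_size(mem)
        print(f"{mem} GiB -> N = {n} ({n * n * 8 / 1024**3:.2f} GiB matrix)")
```

Doubling RAM grows N by roughly a factor of sqrt(2). For a like-for-like comparison, as suggested above, the N computed for the 8 GB board would be used on both boards.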

You mention pimox but it's only PVE7 which is nearing end of life.

I had great success on my pi5 with this ARM port of proxmox : https://github.com/jiangcuo/Proxmox-Port/wiki

The graph puzzles me: what does x represent in the fit?

If it were the amount of memory, you would have gotten a linear fit of Price = 20 * GB + 40 with a perfect fit.

Jeff,

Price is precisely linear, not polynomial. $5/GiB (price= $40 + $5 * xGiB)

The graph isn't spaced correctly on the x axis, which causes confusion.

If you require 16gb of ram, chances are you'll also require nvme storage. At that point I suspect you're better off with an Intel N100 unless you need the rpi IO. With much higher performance, the N100 honestly looks like the better deal here.

Regarding the RAM pricing graph: Not sure if I'm missing anything, but isn't the pricing linear? You pay $10 per 2GB increment (spacing on the x axis is distorted).

Is the D0 coming to the smaller variants? And if so, how would one know if one was getting the D0 or not?

My take on it, as a maker and maintainer of FOSS, is that while *maybe* my end-users don't directly need such a machine to run the programs, I (or my CI farms) certainly benefit from having a beefier faster machine to build them. Even with same-ish CPU performance, more caching and a bigger RAM disk (tmpfs) for scratch workspaces allows for faster build/test turnaround, perhaps spinning up several scenarios in parallel, ultimately benefitting the users as they get tested features quicker.

As VMs/containers were mentioned, yes - such a Pi can also help me run more platforms for manual bug investigations for the popular platform (frankly, builds could be done on x86 cross hosts; however, confirming issues and solutions with the Pi's USB, endianness, or whatever cannot be done so virtually).

Maybe official support for Windows on Arm is on the way for the Pi 5, where 16GB makes sense. Or a Pi 500 "pro" with 16GB, M.2 SSD and PoE for the enterprise market (with Windows 11 Pro). Can I dream?

I would note one major use for these boards is for armv7l building. (You just have to boot into the kernel8 kernels with the smaller page sizes since the default 2712 kernels don't support armv7l.)

We use multiple 8GB RPi 5 boards for armv7l GitHub Actions runners to build software for the armv7l variant of Chromebrew.

When needed, it may make sense for us to use the 16GB boards.

Me ,

Your arguments might be right but that chart is totally misleading. The price on y is on a linear axis but the memory on x is on a logarithmic axis (2 4 8 16 evenly spaced)

Surely this is being built for some high-volume enterprise customers who asked for it, right? And then consumers get access to it because why not.

That may make the most sense, as the BCM2712 isn't quite fit for so much memory for most applications.

Jeff, I really enjoyed this last video and your reasoned, data-driven takes. You gave real-world examples of why you might benefit from the 16GB version, and why a lower RAM version might be just as great for different use cases. Currently, I'm on the "I want a RPi5" side, and at first I thought only the highest GB version would be the best, but honestly for what I would like to use it for, running containerized apps on my homelab with at most 4 users, I probably can pass on the 16GB version and save some cash for the power adapter or a cool hat for an SSD. I'm the guy who uses all the computers that others throw away to run in my homelab. All the RPis that I have came into our home as gifts to myself or my son. Currently, I have two RPi 1s with 512MB of RAM running every day. One RPi 1 is a Bastion Host Box running tailscale as an exit node at the work office, giving me access to my Linux computers over there from home and back to home, connected to my pfSense box (14 year old i3 Dell Optiplex) running tailscale as an exit node. The second RPi 1 is running tailscale, allowing me to connect to it across the state to act as a backup node running borg backup, giving me my 3 source backup that is also offsite. A 12 year old Asus tower and another Dell Optiplex serve as the main app server and backup storage server in the homelab. My son is using a RPi 4 as his Home Assistant Hub. This currently leaves an RPi 3 and RPi 3+ without jobs. My Linux workstations are all computers that were being thrown out: another Asus tower i3 with 8GB of RAM, an HP i7 17" laptop with Optimus Nvidia GPU, a broken screen, and 16GB of RAM, and a Chromebook with hacked firmware and 2GB of RAM as my mobile "fun and vacation" computer. Your positive videos have me "wanting" the latest in ARM tech, but also inspire me to continue getting the most out of those computers that at best would end up at an electronics recycler or at worst in a landfill.
Thanks Jeff for your inspiration and well thought out videos and continuing to advocate for Free and Open Source Software.

Perfect curve fit:

Pi 5 price = 40+5M, where M is memory in GB.
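A quick way to check the linear fit stated above is to compare it against the list prices. This is a minimal sketch: the 2, 4, and 16 GB prices appear in the post, the 8 GB price of $80 is the list price as I understand it, and the function names are hypothetical.

```python
# Pi 5 list prices in USD, keyed by RAM in GB.
# 2, 4 and 16 GB prices are quoted in the post; $80 for 8 GB is assumed.
PRICES = {2: 50, 4: 60, 8: 80, 16: 120}


def linear_price(mem_gb):
    """Price predicted by the commenter's fit: $40 base plus $5 per GB."""
    return 40 + 5 * mem_gb


def max_residual(prices):
    """Largest absolute gap between the fit and the list prices, in USD."""
    return max(abs(linear_price(mem) - price) for mem, price in prices.items())


if __name__ == "__main__":
    for mem, price in PRICES.items():
        print(f"{mem:>2} GB: list ${price}, fit ${linear_price(mem)}")
    print(f"largest residual: ${max_residual(PRICES)}")
```

A residual of zero on every model means a straight line in GB already explains the prices; the curve only looks polynomial when 2, 4, 8, and 16 are spaced evenly on the x axis.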

I wonder how well it's going to fare in the future as stuff starts requiring more RAM over time compared to the 8GB model...

I wouldn't. But that's because I know that unless you're running Windows, you probably don't need that much (for lightweight use). My guess is that Raspberry are selling these because a lot of people don't know that in most cases 8GB of RAM should be plenty for these computers.

-A guy that owns a Chromebook with 4GB of RAM.

Let's see a 'why buy that pi5 at all' investigation please. Times have changed. There are better alternatives now for desktop users.

To me, spending $160 for a 16GB pi5 for the card alone makes zero sense compared to any N100 x86_64 box that would come all integrated already with case, power supply, etc. for less money.

https://www.amazon.com/Beelink-Intel-N100-Computer-Desktop-Display/dp/B… as one example.

I still think the 4GB pi4 is the pi sweet spot for non-desktop uses. Stable, low power, runs cool enough for passive. Plenty of horsepower for small home server needs.

anything about the compute module?

Yeah nah...

Locally these are selling for $215 AUD, for $229 I can buy a Trigkey N100, 16GB RAM and 500GB NVMe (and a spare NVMe slot) with integrated power supply and case included.

The only reasonable use case I see for 16GB is as an ultra low power Proxmox host, and even then the value is questionable: you'd also want to pair it with an NVMe hat and a decently sized NVMe drive, plus a suitable 5A power supply, and you've probably added another $100-150, exceeding the cost of mini PCs that outperform a Pi 5.

You ask why one would spend $120 on a 16GB Pi rather than buying the GMKTec mini PC at $209 (for 16GB). I think the prices speak for themselves: you save $89. If your only criteria are for a very small computer with 16GB RAM the Pi is a no-brainer.

No, you don't really save anything. You'll still need to get an SSD, case, fan and/or active cooling HAT, and power supply for the Pi 5. So the price is very close to a $175 or so equivalently spec'd Beelink N100.

The N100 box doesn't require that you purchase a case, power supply, CPU cooler, SSD hat, and SSD. There are not a lot of applications that require 16GB but don't require an SSD (MicroSD cards are slow, write-seldom devices).

Your example N100 mini PC is at the pricier end of the scale. I just did a search on Amazon and found multiple N100, 16GB systems, with SSDs, for about $150 -- $30 more than the RPi 5 16GB bare board. For example, one is described as "N100 Mini Desktop Computer, Tiny Small PC, 16GB LPDDR5 4800MHz 512GB M.2 SSD, Windows 11, 3xUSB 3.1, 3xHDMI, 4K UHD Triple Display, 1G LAN, Mini PC Server for Office/Business/Home/Education." That's underselling it because it has two gigabit Ethernet ports, not just one.

For the RPi5, PCIe is the new addition alongside the 40-pin header. The RPi5 is small enough to keep close to my FPGA board and is a great bridge to grab data over ethernet, hold it in DRAM and move it to the FPGA over PCIe. I have been keeping my data files relatively small but now I could double their size. My setup is working well with an 8GB RPi5 but this gives me options for the future.

Zynthian, an open-source Linux based music platform (sampled sounds, software synthesizers, sequencing, effects) runs on the Pi5 so I’m sure the 16GB could come in handy for people wanting to load large sample-sets.

I won't be buying one, and I have owned multiple generations -- one through four -- of Raspberry Pi SBC.

I dropped off of the RPi bandwagon during the big COVID-19 first wave, during which Raspberry Pi prioritized deliveries to big corporations over retailers. They relied on hobbyists to develop hardware, software, and documentation that made the RPis into viable SBCs for incorporation into commercial products. Then they abandoned us. We had served our purpose and they didn't need us any longer.

During that time, various NUC-style PCs sporting Intel N100 CPUs became commonplace, all of which trounced the top-of-the-line Raspberry Pis in both performance and expandability. And they did so at a lower cost for comparable configurations, with most being available preconfigured with capacious SSDs and 8, 12, or 16GB of RAM. By the time you buy a Raspberry Pi 5 16GB SBC, a power supply, an SSD hat, the SSD to go onto the hat, and a hat-friendly case, you will have blown past the $150 price point that gets you a ready-to-run, 16GB, N100 NUC-style PC.

Where I had Pi servers, I now have NUC and 'stick' style PCs with N100 and modern generation Celeron CPUs. Most of my Pis, power supplies, and cables (including the hated Micro HDMI adapters) are relegated to a small storage bin. When I recently needed an SBC for a standalone Pi-hole server, I bought an Orange Pi Zero 3 instead of pressing one of the RPis into service.

I'd love to see a test of Windows 11 running on a 16gb Pi 5 if it's even possible. I'm not sure if rpi5-uefi is working with the d0 chips at all.

Hi,

after many unsuccessful attempts (and research, watching installation videos, ...) to install Windows 11 on my system (latest Pi5 with 16GB!!!), I found a video on YouTube that gave me an explanation, which I unfortunately cannot understand maybe due to a lack of know-how ...

In that video, "Raspberry Pi 5 with 16 GB RAM and a new SoC is here! How it performs? Is it better than old one?", a colleague whom I consider to be quite competent explains that the chipset on my NEW Raspberry Pi 5 with 16 GB RAM has probably changed, among other things, and that an installation of Windows 11 is therefore not possible, because the installation environment for Windows 11 on the Raspberry Pi 5 has not been maintained since March 2024.

As I said before, I lack the know-how to check this and so said goodbye to my dream of an RPi5 16GB with Win 11. ;-(

On this Untitled Linux Show, the TWiT panel noted "education" is a great use for the larger capacity. I heartily agree.

Back in 2013, Wolfram Research ported Mathematica to the Pi. It was painfully slow. Today, a 16GB Pi 5 is a monster machine; it should be quite comfortable performing any sort of computational visualization a student would want to do. Wolfram's gift becomes more "generous" every year, because they risk cannibalizing paid versions on other platforms. I for one am grateful for their generosity -- and it is great to "train" students to use their platform for High School, and University, and their eventual startup companies. It's all good -- especially because those students are probably better thinkers for structuring their work inside of Wolfram's APIs. One other benefit: if a student turned in their homework composed in a Wolfram Language Notebook, I bet it would explode their teachers' heads.

Although it does have uses, you do wonder why they only upgrade the Pi 5 for increased RAM. Why not the Pi 500?

My use case is mostly building/testing s/w natively on ARM, YMMV. For this sort of thing both the rpi5 and the N100 suffer from having just 4 CPU cores so extra RAM is a bit wasted.

A 16GiB Rock 5B costs about the same on AliExpress, but has twice as many cores, faster ethernet, doesn't need a hat for NVMe and runs comfortably under load with passive cooling. I find the vendor Armbian build works quite well, but you can run any modern distro supporting UEFI boot with https://github.com/edk2-porting/edk2-rk3588. Not every kernel driver is fully mainlined yet, but that's not better on the rpi5 side and IMHO Collabora have been doing a stellar job so far.

I'm considering buying one, especially because I can pair it with an M.2 SSD.

I intend to run a Modded Minecraft Server off it with some extra smaller services on the side.

Half the RAM will be dedicated to Minecraft. And because it's a very single core service all other cores would essentially be idle if not for the extra services.

Some might, but I won't. I already got a NanoPC-T6. Works quite well, and yeah, I got that with 16GB RAM and 64GB eMMC. Added an NVMe SSD with a Wi-Fi 6E module. Neat little router & web server.