Everything I know about Kubernetes I learned from a cluster of Raspberry Pis

I realized I haven't posted about my DrupalCon Seattle 2019 session titled Everything I know about Kubernetes I learned from a cluster of Raspberry Pis, so I thought I'd remedy that. First, here's a video of the recorded session:

.embed-container { position: relative; padding-bottom: 56.25%; height: 0; overflow: hidden; max-width: 100%; } .embed-container iframe, .embed-container object, .embed-container embed { position: absolute; top: 0; left: 0; width: 100%; height: 100%; }

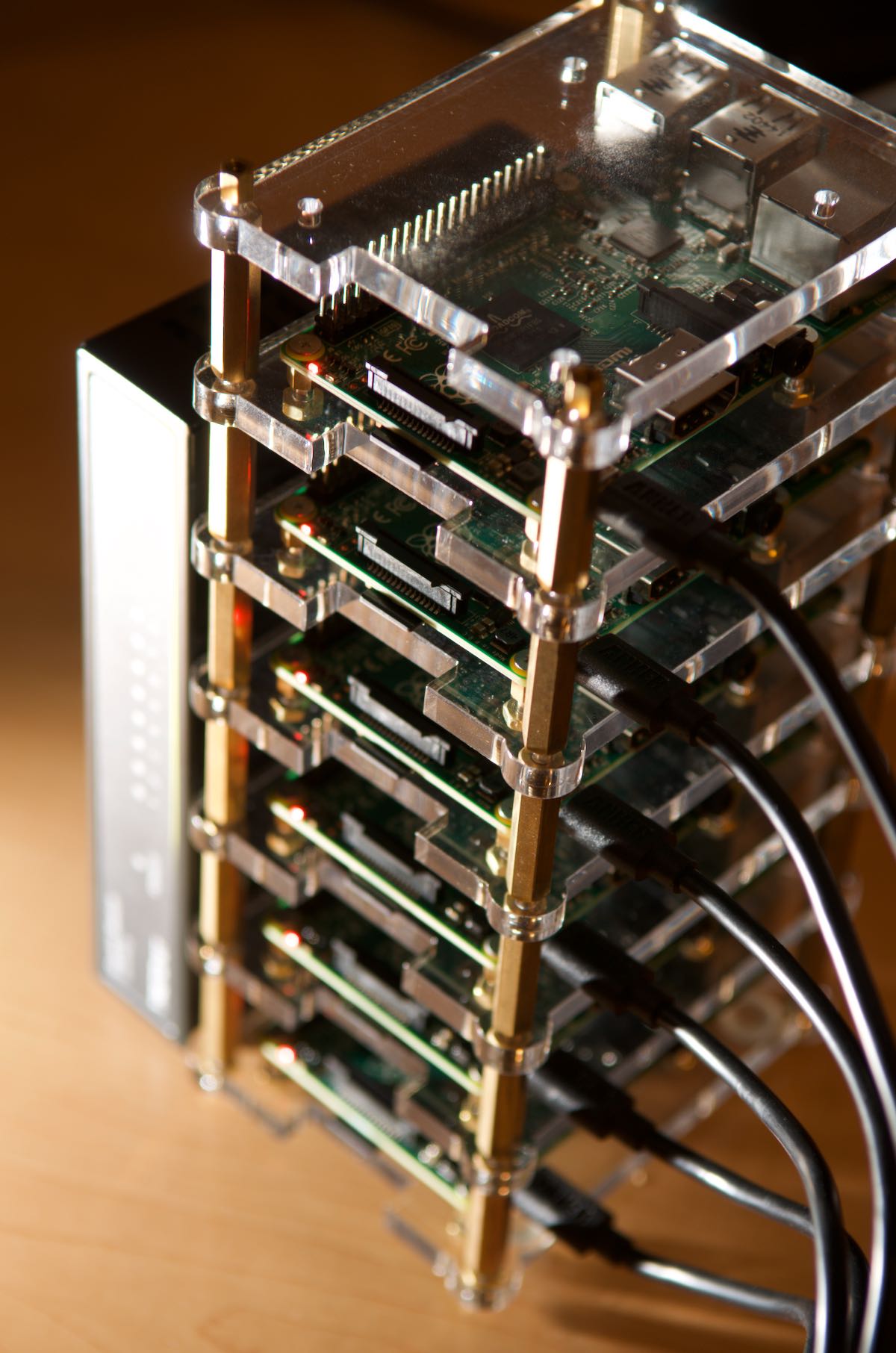

The original Pi Dramble 6-node cluster, running the LAMP stack.