[Update 2015-08-25: I reran some of the tests using two different settings in VirtualBox. First, I explicitly set KVM as the paravirtualization mode (it was saved as 'Legacy' by default, due to a bug in VirtualBox 5.0.0), which showed impressive performance improvements, making VirtualBox perform 1.5-2x faster, and bringing some benchmarks to a dead heat with VMware Fusion. I also set the virtual network card to use 'virtio' instead of emulating an Intel PRO/1000 MT card, but this made little difference in raw network throughput or any other benchmarks.]
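To apply the same two settings to an existing VirtualBox VM outside of Vagrant, here's a minimal Python sketch that shells out to VBoxManage. It assumes VirtualBox 5.0 or later (where the --paravirtprovider option exists) and a powered-off VM. The name drupalvm is a hypothetical placeholder. It only prints the commands unless you pass dry_run=False.

```python
import shlex
import subprocess


def modifyvm_commands(vm_name):
    """Build the VBoxManage calls for the two settings from the update above."""
    return [
        # Use the KVM paravirtualization interface instead of 'Legacy'.
        ["VBoxManage", "modifyvm", vm_name, "--paravirtprovider", "kvm"],
        # Emulate a virtio NIC on adapter 1 instead of an Intel PRO/1000 MT.
        ["VBoxManage", "modifyvm", vm_name, "--nictype1", "virtio"],
    ]


def apply_settings(vm_name, dry_run=True):
    """Print each command; only run it when dry_run is False (VM must be off)."""
    for cmd in modifyvm_commands(vm_name):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    apply_settings("drupalvm")  # hypothetical VM name; dry run by default
```

Vagrant users usually pass the same modifyvm arguments through the VirtualBox provider's customize option in the Vagrantfile instead.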

My Mac spends most of the day running anywhere between one and a dozen VMs. I do all my development (besides iOS or Mac development) with code running inside VMs, and for many years I used VirtualBox, a free virtualization tool, along with Vagrant and Ansible, to build and manage all these VMs.

Since I build and rebuild dozens of VMs per day, and maintain a popular Vagrant configuration for Drupal development (Drupal VM), as well as dozens of other VMs (like Ansible Vagrant Examples), I am highly motivated to find the fastest and most reliable virtualization software for local development. I switched from VirtualBox to VMware Fusion (which requires a paid Vagrant plugin) a year ago, as a few benchmarks I ran at the time showed VMware was 10-30% faster.

Since VirtualBox 5.0 was released earlier this year, I decided to re-evaluate the two VM solutions for local web development (specifically, LAMP/LEMP-based Drupal development, but most of these benchmarks apply to any dev workflow).

I benchmarked the raw performance bits (CPU, memory, disk access) as well as some 'full stack' scenarios (load testing and per-page load performance for some CMS-driven websites). I'll present each benchmark, some initial conclusions based on the results, and the methodology I used for each benchmark.

The key question I wanted to answer: Is purchasing VMware Fusion and the required Vagrant plugin ($140 total!) worth it, or is VirtualBox 5.0 good enough?

Baseline Performance: Memory and CPU

I wanted to make sure VirtualBox and VMware could both do basic operations (like copying memory and performing raw number crunching in the CPU) at similar rates; both should pass as much of the host system's performance as possible through to the VM, so the numbers should be similar:

VMware and VirtualBox are neck and neck when it comes to raw memory and CPU performance. That's to be expected these days, as both solutions (like most other virtualization solutions) can take full advantage of the virtualization features in modern Intel processors and chipsets (like those in my MacBook Air).

CPU or RAM-heavy workloads should perform similarly, though VMware Fusion has a slight edge.

Methodology - CPU/RAM

I used sysbench for the CPU benchmark, with the command sysbench --test=cpu --cpu-max-prime=20000 --num-threads=2 run.

I used Memory Bandwidth Benchmark (mbw) for the RAM benchmark, with the command mbw -n 2 256 | grep AVG, and I used the MEMCPY result as a proxy for general RAM performance.
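If you repeat the mbw run several times, a small parser saves copying numbers by hand. This is a minimal Python sketch that assumes mbw's summary lines look like the sample in the comment (an AVG line per method with a Copy figure in MiB/s); the sample numbers are made up.

```python
import re
import subprocess

# Assumed mbw summary line format (tab-separated), for example:
# AVG  Method: MEMCPY  Elapsed: 0.04518  MiB: 256.00000  Copy: 5666.261 MiB/s
AVG_LINE = re.compile(
    r"^AVG\s+Method:\s*(\w+).*?Copy:\s*([\d.]+)\s*MiB/s", re.MULTILINE
)


def mbw_averages(output):
    """Map each mbw method (MEMCPY, DUMB, MCBLOCK) to its average MiB/s."""
    return {m.group(1): float(m.group(2)) for m in AVG_LINE.finditer(output)}


def run_mbw(iterations=2, size_mib=256):
    """Run mbw like the post does (mbw -n 2 256) and return the AVG figures."""
    result = subprocess.run(
        ["mbw", "-n", str(iterations), str(size_mib)],
        capture_output=True, text=True, check=True,
    )
    return mbw_averages(result.stdout)
```

Reading run_mbw()["MEMCPY"] gives the same figure used above as the proxy for general RAM performance.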

Baseline Performance: Networking

More bandwidth is always better, though most development work doesn't rely on a ton of bandwidth being available. A few hundred megabits should serve web projects in a local environment quickly.

This is one of the few tests in which VMware really took VirtualBox to the cleaners. It makes some sense, as VMware (the company) spends a lot of time optimizing VM-to-VM and VM-to-network-interface throughput since their products are more often used in production environments where bandwidth matters a lot, whereas VirtualBox is much more commonly used for single-user or single-machine purposes.

Having 40% more bandwidth available means VMware should be able to perform certain tasks much faster than VirtualBox: moving files between the host and the VM, moving data between the VM and your network connection (if it's fast enough), or serving hundreds or thousands of concurrent requests. We'll see evidence of this in a Varnish load test later in the post.

Methodology - Networking

To measure raw virtual network interface bandwidth, I used iperf, and set the VM as the server (iperf -s), then connected to it and ran the benchmark from my host machine (iperf -c drupalvm.dev). iperf is an excellent tool for measuring raw bandwidth, as no non-interface I/O operations are performed. Tests such as file copies can have irregular results due to filesystem performance bottlenecks.
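To compare runs, it helps to normalize iperf's bandwidth figure to a single unit. This minimal Python sketch assumes iperf 2-style client summary lines (like the sample in the comment) and takes the last reported rate in the output.

```python
import re

# Assumed iperf 2 client summary line, for example:
# [  3]  0.0-10.0 sec  3.36 GBytes  2.88 Gbits/sec
RATE = re.compile(r"([\d.]+)\s*([KMG])bits/sec")
TO_MBIT = {"K": 0.001, "M": 1.0, "G": 1000.0}


def iperf_mbits(output):
    """Return the last reported bandwidth in the output, normalized to Mbit/s."""
    matches = RATE.findall(output)
    if not matches:
        raise ValueError("no bandwidth figure found in iperf output")
    value, unit = matches[-1]
    return float(value) * TO_MBIT[unit]
```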

Disk Access and Shared/Synced Folders

One of the largest performance differentiators—and one of the most difficult components to measure—is filesystem performance. Virtual Machines use virtual filesystems, or connect to folders on the host system via some sort of mounted share, to provide a filesystem the guest OS uses.

Filesystem I/O performance is impossible to measure simply and universally, because every use case (e.g. media streaming, small file reads, small file writes, or database access patterns) benefits from different types of file read/write performance.

Since most filesystems (and even the slowest of slow microSD cards) are fast enough for large file operations (reading or writing large files in large chunks), I decided to benchmark one of the most brutal metrics of file I/O, 4k random read/write performance. For many web applications and databases, common access patterns either require hundreds or thousands of small file reads, or many concurrent small write operations, so this is a decent proxy of how a filesystem will perform under the most severe load (e.g. reading an entire PHP application's files from disk, when opcaches are empty, or rebuilding key-value caches in a database table).
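To make the 4K random access pattern concrete, here is a minimal Python sketch that writes and then reads random 4 KiB blocks in a test file. It is not iozone and not how the numbers in this post were produced. It uses ordinary buffered I/O rather than O_DIRECT, so the OS page cache will inflate the results, which is the same caveat discussed below for iozone runs without -I. The file size and operation count are arbitrary assumptions. Pointing the path at a synced folder versus the VM's native filesystem lets you compare the two.

```python
import os
import random
import tempfile
import time

BLOCK = 4096  # 4 KiB records, like iozone's -r 4k


def random_4k_io(path, file_size=16 * 1024 * 1024, ops=2000, seed=0):
    """Return (read_MB_s, write_MB_s) for random 4 KiB reads and writes.

    Buffered I/O (no O_DIRECT), so the page cache can inflate both figures.
    POSIX-only: os.pread/os.pwrite are not available on Windows.
    """
    rng = random.Random(seed)
    blocks = file_size // BLOCK
    payload = os.urandom(BLOCK)
    write_offsets = [rng.randrange(blocks) * BLOCK for _ in range(ops)]
    read_offsets = [rng.randrange(blocks) * BLOCK for _ in range(ops)]

    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        # Pre-fill the file so reads hit real blocks, not holes in a sparse file.
        chunk = b"\0" * (1024 * 1024)
        for pos in range(0, file_size, len(chunk)):
            os.pwrite(fd, chunk[: file_size - pos], pos)
        os.fsync(fd)

        start = time.perf_counter()
        for off in write_offsets:
            os.pwrite(fd, payload, off)
        os.fsync(fd)  # count the flush, similar in spirit to iozone -e
        write_s = time.perf_counter() - start

        start = time.perf_counter()
        for off in read_offsets:
            os.pread(fd, BLOCK, off)
        read_s = time.perf_counter() - start
    finally:
        os.close(fd)

    megabytes = ops * BLOCK / 1_000_000
    return megabytes / read_s, megabytes / write_s


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        read_mb, write_mb = random_4k_io(os.path.join(tmp, "io-test.bin"))
        print(f"random 4K read: {read_mb:.1f} MB/s, write: {write_mb:.1f} MB/s")
```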

I measured 4k random reads and writes across three different VM scenarios: first, using the VM's native share mechanism (or 'synced folder' in Vagrant parlance); second, using NFS, a common and robust network share mechanism that's easy to use with Vagrant; and third, reading and writing directly to the native VM filesystem:

The results above, as with all other benchmarks in this post, were repeated at least four times, with the first result set discarded. Even then, the standard deviation on these benchmarks was typically 5-10%, and the results varied wildly depending on the exact benchmark I used.
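The "discard the first run, average the rest" approach, plus the relative standard deviation quoted above, can be sketched in a few lines of Python. The input values in the usage line are hypothetical, not results from this post.

```python
import statistics


def summarize(runs):
    """Drop the first (warm-up) run, then return (mean, relative stdev in %)."""
    if len(runs) < 3:
        raise ValueError("need at least 3 runs: 1 discarded + 2 to compute a stdev")
    kept = runs[1:]
    mean = statistics.mean(kept)
    rel_stdev = statistics.stdev(kept) / mean * 100
    return mean, rel_stdev


# Hypothetical random-read results in MB/s; the first run is discarded.
mean, spread = summarize([41.0, 30.2, 28.9, 31.5])
print(f"{mean:.1f} MB/s +/- {spread:.1f}%")
```

Reporting the spread as a percentage of the mean makes the 5-10% figure comparable across benchmarks with very different units.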

I was able to reproduce the strange I/O performance numbers in Mitchell Hashimoto's 2014 post when I didn't use direct filesystem access to do reads and writes; certain benchmarks suggest the VM filesystem is capable of over 1 GB/sec of random 4K reads and writes! For comparison, running the same benchmarks on my MacBook Air's internal SSD showed maximum performance of 1891 MB/s read and 389 MB/s write.

Passing the -I option to the iozone benchmarking tool makes the tests bypass the VM's disk caching mechanisms, which mask the actual filesystem performance. Unfortunately, this option (which uses O_DIRECT filesystem access) doesn't work with native VM shares, so those numbers may be somewhat inflated relative to real-world performance.

The key takeaway? No matter the filesystem you use in a VM, raw file access is an order of magnitude slower than native host I/O if you have a fast SSD. Luckily, the raw performance isn't horrendous (as long as you're not copying millions of tiny files!), and common development access patterns help filesystem and other caches speed up file operations.

Methodology - Disk Access

I used iozone to measure disk access, using the command iozone -I -e -a -s 64M -r 4k -i 0 -i 2 [-f /path/to/file]. I also repeated the tests numerous times with different -s values ranging from 128M to 1024M, but the performance numbers were similar with any value.
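Here's a minimal Python sketch for scripting the -s size sweep mentioned above. The flags are the ones from the command in this section: -I (O_DIRECT), -e (include flush in the timing), -a (automatic mode), -s (file size), -r (record size), -i 0 (write/rewrite), -i 2 (random read/write), and -f (test file location). The direct parameter is an assumption added so you can drop -I on native VM shares, where O_DIRECT doesn't work.

```python
import shutil
import subprocess


def iozone_cmd(size="64M", test_file=None, direct=True):
    """Build the iozone invocation used in this post for one file size."""
    cmd = ["iozone"]
    if direct:
        cmd.append("-I")  # O_DIRECT; omit on native VM shares
    cmd += ["-e", "-a", "-s", size, "-r", "4k", "-i", "0", "-i", "2"]
    if test_file is not None:
        cmd += ["-f", test_file]  # e.g. a file inside a synced folder
    return cmd


def sweep(sizes=("64M", "128M", "256M", "512M", "1024M"), test_file=None, direct=True):
    """Run iozone once per size and return the raw output keyed by size."""
    if shutil.which("iozone") is None:
        raise RuntimeError("iozone is not installed")
    return {
        size: subprocess.run(
            iozone_cmd(size, test_file, direct),
            capture_output=True, text=True, check=True,
        ).stdout
        for size in sizes
    }
```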

If you're interested in diving deeper into filesystem benchmarking, iozone's default set of tests is much broader and applicable across a very wide range of use cases (besides typical LAMP/LEMP web development).

Full Stack - Drupal 7 and Drupal 8

When it comes down to it, the most meaningful benchmark is a 'full stack' benchmark, which tests the application I'm developing. In my case, I am normally working on Drupal-based websites, so I wanted to test both Drupal 8 and Drupal 7 (the current stable release) in two scenarios—a clean install of Drupal 8 (with nothing extra added), and a fairly heavy Drupal 7 site, to mirror some of the more complicated sites I have to work with.

First, here's a comparison of 'requests per second' with VirtualBox and VMware. Higher numbers are better, and this test is a decent proxy for how fast the VM is rendering specific pages, as well as how many requests the full stack/server can serve in a short period of time:

The first two benchmarks are very close. When your application is mostly CPU-and-RAM-constrained (Drupal 8 is running almost entirely out of memory using PHP's opcache and MySQL caches), both virtualization apps are about the same, with a very slight edge going to VMware Fusion.

The third graph is more interesting, as it shows a large gap: VMware can serve 43% more traffic than VirtualBox. Comparing this graph with the raw network throughput graph above, VMware Fusion's greater network bandwidth looks like the main reason it serves so many more requests/sec in a network-constrained benchmark like Varnish capacity.
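When comparing throughput numbers it's easy to mix up "percent more" and "times as many"; a 43% gain is a ratio of 1.43, while doubling would be a 100% gain. This tiny Python sketch makes the distinction explicit; the request rates in the test are hypothetical.

```python
def percent_more(faster, slower):
    """How much higher 'faster' is than 'slower', in percent."""
    return (faster / slower - 1.0) * 100.0


def ratio(faster, slower):
    """Plain speedup factor; 2.0 would mean 'double'."""
    return faster / slower
```

With the same helper you can check whether a request-rate gain roughly tracks the bandwidth gain measured with iperf (about 40% here), which is what you'd expect from a network-bound workload.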

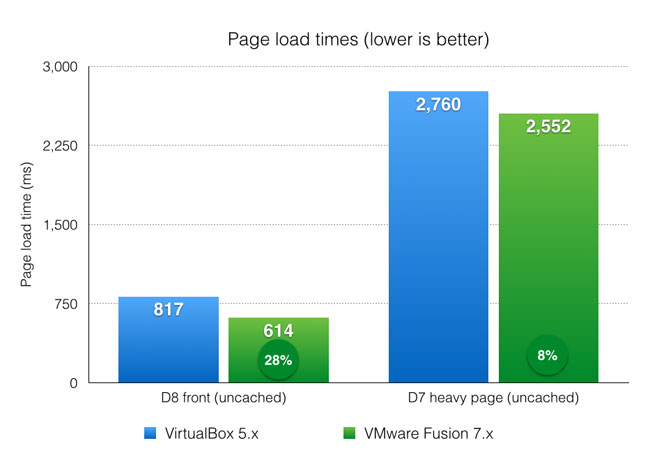

Developing a site with frequently-changing code requires more disk I/O, since the opcache has to be rebuilt from disk, so I tested raw page load times with a fresh PHP thread:

For this test, I restarted Apache entirely between each page request, which wiped out PHP's opcache and forced all the PHP files to be read from disk. These benchmarks were run using an NFS share, so the larger gap here (compared with the load test in the previous benchmark) comes mainly from VMware's slightly faster NFS shared filesystem performance.
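The restart-then-request loop can be sketched in Python as below. The restart_apache helper is hypothetical: it assumes a Vagrant-managed Ubuntu 14.04 guest with the apache2 service, and PHP running inside Apache (mod_php) so that a restart actually clears the opcache. Both the restart and fetch steps are injectable, so you can swap in your own commands.

```python
import statistics
import subprocess
import time
import urllib.request


def restart_apache():
    """Hypothetical helper: restart Apache in the guest from the host.

    Assumes a Vagrant-managed Ubuntu 14.04 guest using the 'apache2' service,
    and PHP running inside Apache (mod_php), so a restart clears the opcache.
    """
    subprocess.run(
        ["vagrant", "ssh", "-c", "sudo service apache2 restart"], check=True
    )


def fetch(url):
    """Request the page and read the full body (like curl > /dev/null)."""
    with urllib.request.urlopen(url) as response:
        response.read()


def cold_page_load(url, runs=6, restart=restart_apache, get=fetch):
    """Mean seconds per request, restarting Apache before every request."""
    timings = []
    for _ in range(runs):
        restart()
        start = time.perf_counter()
        get(url)  # only the request itself is timed, not the restart
        timings.append(time.perf_counter() - start)
    return statistics.mean(timings)
```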

In real world usage, there's a perceptible performance difference between VirtualBox and VMware Fusion, and these benchmarks confirm it.

Many people decide to use native synced folders because file permissions and setup can often be simpler, so I wanted to see how much not using NFS affects these numbers:

As it turns out, NFS has a lot to offer in terms of performance for apps running in a shared folder. Another interesting discovery: VMware's native shared folder performs nearly as well as the ideal scenario in VirtualBox (running the codebase on an NFS mount).

I still highly recommend using NFS instead of native shared folders if you're sharing more than a few files between host and guest.

Methodology - Full Stack Performance

I used ab, wrk, and curl to run performance benchmarks and simple load tests:

- Drupal anonymous cached page load: wrk -d 30 -c 2 http://drupalvm.dev/

- Drupal authenticated page load: ab -n 500 -c 2 -C "SESS:COOKIE" http://drupalvm.dev/ (using the uid 1 user's session cookie)

- Varnish anonymous proxied page load: wrk -d 30 -c 2 http://drupalvm.dev:81/ (with a cache lifetime value of '15 minutes' set on the performance configuration page)

- Drupal 8 front page, uncached: time curl --silent http://drupalvm.dev/ > /dev/null (run once after clicking 'Clear all caches' on the `admin/config/development/performance` page, averaged over six runs)

- Large Drupal 7 site views/panels page request: time curl --silent http://local.example.com/path > /dev/null (run once after clicking 'Clear all caches' on the `admin/config/development/performance` page, averaged over six runs)
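When collecting many wrk and ab runs, a small parser can pull out the headline throughput figure. This minimal Python sketch assumes wrk's "Requests/sec:" summary line and ab's "Requests per second:" summary line; the sample outputs in the test are illustrative, not results from this post.

```python
import re

PATTERNS = (
    re.compile(r"^[ \t]*Requests/sec:\s+([\d.]+)", re.MULTILINE),         # wrk
    re.compile(r"^[ \t]*Requests per second:\s+([\d.]+)", re.MULTILINE),  # ab
)


def requests_per_second(output):
    """Return the requests/sec figure from wrk or ab output."""
    for pattern in PATTERNS:
        match = pattern.search(output)
        if match:
            return float(match.group(1))
    raise ValueError("no requests/sec line found")
```

Feeding the per-run figures into the same discard-first averaging used elsewhere in this post keeps all the full-stack numbers consistent.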

Drupal 8 tests were run with a standard profile install of a Drupal 8 site (ca. beta 12) on Drupal VM 2.0.0, and Drupal 7 tests were run using a very large scale Drupal codebase, with over 150 modules.

Summary

I hope these benchmarks help you to decide if VMware Fusion is right for your Vagrant-based development workflow. If you use synced folders a lot and need as much bandwidth as possible, choosing VMware is a no-brainer. If you don't, then VirtualBox is likely 'fast enough' for your development workflow.

It's great to have multiple great choices for VM providers for local development—and in this case, the open source option holds its own against the heavyweight proprietary virtualization app!

Methodology - All Tests

Since I detest when people post benchmarks but don't describe the system under test and all their reasons behind testing things certain ways, I thought I'd explicitly outline everything here, so someone else with the time and materials could replicate all my test results verbatim.

- I ran all benchmarks four times (with the exception of some of the disk benchmarks, which I ran six times for better coverage of random I/O variance), discarded the first result, and averaged the remaining results.

- All tests were run using an unmodified copy of Drupal VM version 2.0.0, with all the example configuration files (though all extra installations besides Varnish were removed), using the included Ubuntu 14.04 LTS minimal base box (which is built using this Packer configuration, the same for both VirtualBox and VMware Fusion).

- For full stack Drupal benchmarking for Varnish-cached pages, I logged into Drupal and set a minimum cache lifetime value of '15 minutes' on the performance configuration page, and for authenticated page loads, I used the session cookie for the logged in uid 1 user.

- All tests were run on my personal 11" Mid 2013 MacBook Air, with a 1.7 GHz Intel Core i7 processor, 8 GB of RAM, and a 256 GB internal SSD. The only other applications (besides headless VMs and Terminal) that were open and running during tests were Mac OS X Mail and Sublime Text 3 (in which I noted benchmark results).

- All tests were performed with my Mac disconnected entirely from the Internet (WiFi disabled, and no connection otherwise), to minimize any strange networking problems that could affect performance.

Comments

You might be calling me a scrooge after this one but I just don't see the value in the cost. Yes, it's faster but it's not like I'm waiting around for days...and it's $79 for the plugin with the additional cost of VMware Fusion for my Mac and VMWare workstation for my Linux PC.

I won't call you a scrooge—VirtualBox is getting better and better, and on most modern workstations, there are only a few situations where you'll feel anything resembling a true slowdown when developing with VB. Definitely avoid native shared folders, though!

Years ago, when a Core 2 Duo was a modern chip, I tried both VMware Fusion and VirtualBox. Empirically, I didn't notice a difference in performance, but I didn't benchmark it either. What I did notice is VirtualBox consumed slightly more CPU for an idle VM, which translated to shorter battery life.

I did some rough benchmarks on performance between Fusion and VirtualBox 4 about six months ago, and also found a very small difference. This was with https://github.com/Lullabot/trusty32-lamp testing lbzip2 decompressions and database imports, and the differences were within the standard deviation. Thanks for doing the deep dive and writing this all up!

I'd actually bought the Vagrant plugin because I kept having issues with the Virtualbox network adapter flaking out and requiring a host reboot. My hope was that VMWare would have better support and reliability. For Ubuntu hosts, there was an issue with the guest additions where they were completely broken for ~4 months. VMWare's poor handling of that drove me back to using Virtualbox as my default platform (which has been very stable for me over the past few releases)

https://communities.vmware.com/thread/502554

Were your Virtualbox tests done with the paravirtualization setting set to KVM? That should be the default for from-scratch VMs, but I've seen boxes end up with it being set to "legacy" which really hurts CPU performance.

https://github.com/blinkreaction/boot2docker-vagrant/pull/35

Looks like it was defaulting to Legacy for some reason. I'm using Packer and built the first VirtualBox 5.x box using 5.0.0, which didn't properly export the paravirtualization provider (see bug report: https://www.virtualbox.org/ticket/14390). So I'll be rebuilding the base box so its default is correct (see: https://github.com/geerlingguy/packer-ubuntu-1404/issues/12).

Also, I did some extra benchmarks to compare 'Legacy' and 'KVM', and it does seem there's a significant gain when using KVM (as you pointed out!): https://github.com/geerlingguy/drupal-vm/issues/212. So to 'stop the bleeding' (as it were), I've added a line explicitly setting the value to KVM in Drupal VM's Vagrantfile. But once it's fixed in the box itself, anyone using geerlingguy/ubuntu1404 (or any of my other base boxes) will get the benefit! I'll be updating the post with this and some updated networking benchmarks very soon.

I've been trying to overcome some very long (10s+) time to first byte issues using Vagrant. Does changing paravirtualization to KVM require destroying and rebuilding the VM?

It should only require a vagrant reload, as long as you have the latest version of VirtualBox.

I think you're comparing VMware's desktop product with VirtualBox. Try comparing VMware ESXi to VirtualBox and see how you go :)

Yes; I'm using VMWare Desktop vs VirtualBox as for most developers, these are the two options they'd be using for Vagrant-based development workflows (or maybe Parallels Desktop). Other hypervisors/VM setups like ESXi are more geared towards server, and not desktop/individual developer, applications.

Does VMWare propagate file system events for mounted shares with the host? I'm consistently fighting Vbox because when I make changes in SublimeText, my development server doesn't reload anything because Vbox didn't propagate the file change to the VM.

You should test OpenGL/DirectX GPU performance. In my experience, VMware's GPU drivers are over 30 times faster than VirtualBox's! With a Windows 7 host and a Windows 7 guest on an Intel Core i7-6700 iGPU, DirectX/OpenGL games like Tibia, Knights Online, and Flyff run at a completely unplayable 1 FPS in VirtualBox, and a comfy 30-ish FPS in VMware. (And yes, I had installed the VirtualBox hardware 3D driver; without it, many of the games wouldn't start at all, but installing it got them to 1 FPS instead of crashing.)